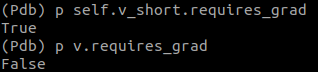

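Because an `nn.Parameter` is a leaf tensor with `requires_grad=True`, autograd tracks every operation that uses it, so anything computed from it requires grad as well. The other ones are constants (buffers inside an `nn.Module`) that do not require gradients. In fact, you don't really need an `nn.Module` at all: you can optimize a plain tensor directly, as long as it is a leaf tensor that requires gradients.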
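A quick way to see the difference is to inspect a module that holds both kinds of tensors. The sketch below uses `nn.BatchNorm1d` purely as a convenient built-in example (it is not part of the original question): its `weight` and `bias` are parameters, while its running statistics are buffers. It also shows that an optimizer accepts a plain leaf tensor but rejects a non-leaf one; `leaf` and `non_leaf` are illustrative names, and the exact error message may differ between PyTorch versions.

```python
import torch
import torch.nn as nn

# BatchNorm1d is used only because it has both parameters and buffers.
bn = nn.BatchNorm1d(3)

param_names = [name for name, _ in bn.named_parameters()]
buffer_names = [name for name, _ in bn.named_buffers()]
print(param_names)   # ['weight', 'bias']
print(buffer_names)  # ['running_mean', 'running_var', 'num_batches_tracked']

# Parameters are leaves that require grad; buffers are leaves that do not.
print(bn.weight.is_leaf, bn.weight.requires_grad)              # True True
print(bn.running_mean.is_leaf, bn.running_mean.requires_grad)  # True False

# A plain tensor is just as optimizable, provided it is a leaf.
leaf = torch.ones(3, 5).requires_grad_()
torch.optim.SGD([leaf], lr=1)  # accepted

# The result of an operation is not a leaf, and the optimizer rejects it.
non_leaf = torch.ones(3, 5, requires_grad=True) * 2
try:
    torch.optim.SGD([non_leaf], lr=1)
    rejected = False
except ValueError as err:
    rejected = True
    print("ValueError:", err)
```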

```python
import torch

# Constants: plain tensors that do not require gradients
cte1 = torch.rand(5, 3).requires_grad_(False)
cte2 = torch.rand(5, 5).requires_grad_(False)

# The tensor to optimize: a leaf tensor that requires gradients
tensor = torch.ones(3, 5).requires_grad_()

optim = torch.optim.SGD([tensor], lr=1)

print(f'Initial tensor'
      f'{tensor}')

for i in range(5):
    optim.zero_grad()
    print(f'Iteration {i}')
    output = cte1 @ tensor + cte2
    print(f'Requires grad? {output.requires_grad}')
    output.sum().backward()
    print(f'Tensor gradients \n'
          f' {tensor.grad}')
    optim.step()
    print(f'Tensors \n'
          f' {tensor}')
```
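For comparison, here is a sketch of the same optimization written with an `nn.Module`, assuming the two constants are registered as buffers and the optimized tensor is an `nn.Parameter`. The `Affine` class and the `run` helper are hypothetical names introduced only for this illustration, and the fixed seed is an assumption so the two versions share the same constants. Both end up with the same values: the module is just a container around the same leaf tensor.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
cte1 = torch.rand(5, 3)
cte2 = torch.rand(5, 5)


class Affine(nn.Module):
    """Hypothetical module computing cte1 @ weight + cte2."""

    def __init__(self, cte1, cte2):
        super().__init__()
        self.weight = nn.Parameter(torch.ones(3, 5))  # trainable leaf
        self.register_buffer("cte1", cte1)            # constant
        self.register_buffer("cte2", cte2)            # constant

    def forward(self):
        return self.cte1 @ self.weight + self.cte2


def run(params, forward, steps=5):
    """Hypothetical helper: plain SGD with lr=1 on forward().sum()."""
    optim = torch.optim.SGD(params, lr=1)
    for _ in range(steps):
        optim.zero_grad()
        forward().sum().backward()
        optim.step()


# Plain tensor version, as in the post
tensor = torch.ones(3, 5).requires_grad_()
run([tensor], lambda: cte1 @ tensor + cte2)

# nn.Module version
model = Affine(cte1, cte2)
run(model.parameters(), model)

print(torch.allclose(tensor, model.weight))  # True
```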

```
Initial tensortensor([[1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1.]], requires_grad=True)
Iteration 0
Requires grad? True
Tensor gradients
 tensor([[1.4718, 1.4718, 1.4718, 1.4718, 1.4718],
        [1.7690, 1.7690, 1.7690, 1.7690, 1.7690],
        [2.1010, 2.1010, 2.1010, 2.1010, 2.1010]])
Tensors
 tensor([[-0.4718, -0.4718, -0.4718, -0.4718, -0.4718],
        [-0.7690, -0.7690, -0.7690, -0.7690, -0.7690],
        [-1.1010, -1.1010, -1.1010, -1.1010, -1.1010]], requires_grad=True)
Iteration 1
Requires grad? True
Tensor gradients
 tensor([[1.4718, 1.4718, 1.4718, 1.4718, 1.4718],
        [1.7690, 1.7690, 1.7690, 1.7690, 1.7690],
        [2.1010, 2.1010, 2.1010, 2.1010, 2.1010]])
Tensors
 tensor([[-1.9435, -1.9435, -1.9435, -1.9435, -1.9435],
        [-2.5379, -2.5379, -2.5379, -2.5379, -2.5379],
        [-3.2019, -3.2019, -3.2019, -3.2019, -3.2019]], requires_grad=True)
Iteration 2
Requires grad? True
Tensor gradients
 tensor([[1.4718, 1.4718, 1.4718, 1.4718, 1.4718],
        [1.7690, 1.7690, 1.7690, 1.7690, 1.7690],
        [2.1010, 2.1010, 2.1010, 2.1010, 2.1010]])
Tensors
 tensor([[-3.4153, -3.4153, -3.4153, -3.4153, -3.4153],
        [-4.3069, -4.3069, -4.3069, -4.3069, -4.3069],
        [-5.3029, -5.3029, -5.3029, -5.3029, -5.3029]], requires_grad=True)
Iteration 3
Requires grad? True
Tensor gradients
 tensor([[1.4718, 1.4718, 1.4718, 1.4718, 1.4718],
        [1.7690, 1.7690, 1.7690, 1.7690, 1.7690],
        [2.1010, 2.1010, 2.1010, 2.1010, 2.1010]])
Tensors
 tensor([[-4.8871, -4.8871, -4.8871, -4.8871, -4.8871],
        [-6.0759, -6.0759, -6.0759, -6.0759, -6.0759],
        [-7.4039, -7.4039, -7.4039, -7.4039, -7.4039]], requires_grad=True)
Iteration 4
Requires grad? True
Tensor gradients
 tensor([[1.4718, 1.4718, 1.4718, 1.4718, 1.4718],
        [1.7690, 1.7690, 1.7690, 1.7690, 1.7690],
        [2.1010, 2.1010, 2.1010, 2.1010, 2.1010]])
Tensors
 tensor([[-6.3588, -6.3588, -6.3588, -6.3588, -6.3588],
        [-7.8448, -7.8448, -7.8448, -7.8448, -7.8448],
        [-9.5048, -9.5048, -9.5048, -9.5048, -9.5048]], requires_grad=True)
```
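The gradients are identical in every iteration because the loss is linear in `tensor`: the gradient of `(cte1 @ tensor + cte2).sum()` with respect to `tensor` is `cte1.T @ torch.ones(5, 5)`, so every entry of row `i` is the sum of column `i` of `cte1`, and `cte2` does not contribute at all. With plain SGD and `lr=1`, after `k` steps the tensor is therefore `1 - k * grad`, which is why each row drops by the same amount every iteration. A small check of both facts, assuming a fixed seed (so the numbers will differ from the run above); `expected`, `first_grad` and `closed_form` are illustrative names:

```python
import torch

torch.manual_seed(0)
cte1 = torch.rand(5, 3)
cte2 = torch.rand(5, 5)
tensor = torch.ones(3, 5).requires_grad_()

# One backward pass to inspect the gradient
(cte1 @ tensor + cte2).sum().backward()
first_grad = tensor.grad.clone()

# Analytical gradient: cte1.T @ ones(5, 5); row i is the column-i sum of cte1
expected = cte1.T @ torch.ones(5, 5)
print(torch.allclose(first_grad, expected))                 # True
print(torch.allclose(first_grad[:, 0], cte1.sum(dim=0)))    # True

# Constant gradient + SGD(lr=1) => after k steps, tensor = 1 - k * grad
k = 5
closed_form = torch.ones(3, 5) - k * expected

optim = torch.optim.SGD([tensor], lr=1)
for _ in range(k):
    optim.zero_grad()
    (cte1 @ tensor + cte2).sum().backward()
    optim.step()

print(torch.allclose(tensor, closed_form))  # True
```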