Hello,

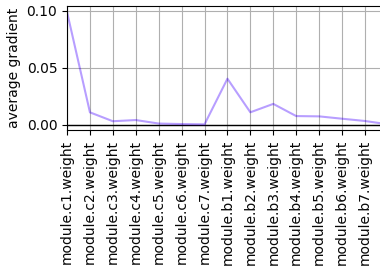

In my classification project, I checked the gradient flow using the answers from Check gradient flow in network - #7 by RoshanRane.

The network structure is:

CNN layers: c1-c7

Batch-normalization layers: b1-b7

The ReLU activation function is applied between the CNN and batch-normalization layers.

To analyse the gradient flow, I plotted only these layers:

c1, b1, c2, b2, c3, b3, c4, b4, c5, b5, c6, b6, c7, b7

These are followed by one output_layer (a linear layer), which is not included in the gradient flow graph.
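For reference, here is a minimal sketch of how the per-layer average gradients can be collected after `loss.backward()`. The model `TinyNet` is a hypothetical stand-in with only two conv blocks instead of seven, the conv -> ReLU -> batch-norm ordering is my assumption about the structure described above, and the helper `average_gradients` is an illustrative name, not the exact function from the linked thread. Unlike my plot, this sketch also reports the output layer.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical, shortened stand-in for the network (2 blocks instead of 7)."""

    def __init__(self):
        super().__init__()
        self.c1 = nn.Conv2d(1, 4, kernel_size=3, padding=1)
        self.b1 = nn.BatchNorm2d(4)
        self.c2 = nn.Conv2d(4, 8, kernel_size=3, padding=1)
        self.b2 = nn.BatchNorm2d(8)
        self.output_layer = nn.Linear(8, 3)

    def forward(self, x):
        # Assumed ordering: conv -> ReLU -> batch norm
        x = self.b1(torch.relu(self.c1(x)))
        x = self.b2(torch.relu(self.c2(x)))
        x = x.mean(dim=(2, 3))  # global average pooling over height and width
        return self.output_layer(x)


def average_gradients(model):
    """Return {parameter name: mean absolute gradient} for all non-bias parameters."""
    stats = {}
    for name, param in model.named_parameters():
        if param.requires_grad and param.grad is not None and "bias" not in name:
            stats[name] = param.grad.abs().mean().item()
    return stats


torch.manual_seed(0)
model = TinyNet()
inputs = torch.randn(8, 1, 16, 16)    # random dummy batch
targets = torch.randint(0, 3, (8,))   # random dummy labels

loss = nn.functional.cross_entropy(model(inputs), targets)
loss.backward()

grad_stats = average_gradients(model)
for name, value in grad_stats.items():
    print(f"{name}: {value:.3e}")
```

Calling `average_gradients(model)` once per epoch, right after `loss.backward()`, gives one value per layer that can be plotted over the epochs.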

The average gradient in the CNN layers c5-c7 barely changes across the last epochs.

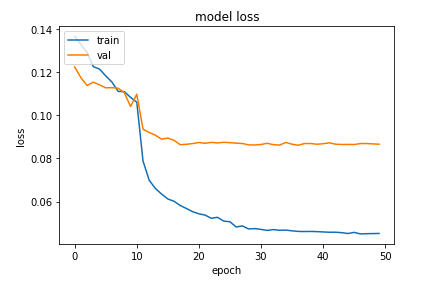

And this is the loss graph for 50 epochs.

If the gradient is very small or close to 0, that indicates a vanishing gradient.

But the actual definition of the vanishing gradient problem is: the gradient may be so small by the time it reaches the layers close to the input of the model that it has very little effect.
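To make that definition concrete, here is a toy sketch of the chain rule in a stack of scalar sigmoid layers. This is only an illustration and not my network (which uses ReLU and batch normalization); the function `layer_gradients` and the chosen weights are hypothetical. Each layer multiplies the upstream gradient by a local derivative of at most 0.25, so the gradient shrinks the closer it gets to the input.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_gradients(x, weights):
    """Absolute gradient of the final output w.r.t. the input of each layer
    in a chain of scalar layers a_out = sigmoid(w * a_in)."""
    # Forward pass: store the activation entering and leaving every layer.
    activations = [x]
    for w in weights:
        activations.append(sigmoid(w * activations[-1]))

    # Backward pass: multiply local derivatives from the output toward the input.
    grads = [0.0] * len(weights)
    upstream = 1.0
    for i in reversed(range(len(weights))):
        a_out = activations[i + 1]
        local = a_out * (1.0 - a_out) * weights[i]  # d a_out / d a_in
        upstream *= local
        grads[i] = abs(upstream)
    return grads


# Seven layers with weight 1.0; index 0 is the layer closest to the input.
grads = layer_gradients(0.5, [1.0] * 7)
for i, g in enumerate(grads, start=1):
    print(f"layer {i}: {g:.3e}")
```

In this toy chain the smallest gradient belongs to the layer closest to the input, which is the classic vanishing gradient pattern.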

In my case it is the opposite: the gradients are almost zero in the layers near the output layer, while the gradients still change in the layers near the input layer.

How is this possible, and how can I solve the vanishing gradient in the layers near the output layer?

Thanks