I could not find anywhere how to perform a many-to-many classification task in PyTorch.

To give details: I have a time-series sequence where each time step is labeled either 0 or 1. For example, if my input has size [256x64x4] (256: batch size, 64: sequence length, 4: feature size, assuming the data is structured batch-first), then the output has size [256x64x1]. I have written the following code for forward propagation (I am not sure whether I am proceeding correctly).

    import torch
    import torch.nn as nn


    class RNNS2S(nn.Module):
        """GRU that predicts a class label for every time step (many-to-many)."""

        def __init__(self, input_dimensions=4, hidden_dimensions=512, num_classes=2, num_layers=2,
                     dropout=0.0, batch_first=True, bidirectional=False, seq_len=64):
            super(RNNS2S, self).__init__()
            self.input_dimensions = input_dimensions
            self.hidden_dimensions = hidden_dimensions
            self.num_classes = num_classes
            self.num_layers = num_layers
            self.dropout = dropout
            self.batch_first = batch_first
            self.bidirectional = bidirectional
            self.seq_len = seq_len
            self.num_directions = 2 if self.bidirectional else 1

            # GRU layer
            self.gru = nn.GRU(input_size=self.input_dimensions, hidden_size=self.hidden_dimensions,
                              num_layers=self.num_layers, batch_first=self.batch_first,
                              dropout=self.dropout, bidirectional=self.bidirectional)

            # One linear layer applied to every time step, so its weights are shared
            # across the sequence. Output: batch_size x seq_len x num_classes.
            # (A plain Python list of nn.Linear layers is not registered as parameters;
            # it would need nn.ModuleList, and the weights would then not be shared.)
            self.fc = nn.Linear(in_features=self.hidden_dimensions * self.num_directions,
                                out_features=self.num_classes)

        def forward(self, X):
            # M: batch size (if batch first), S: sequence length, N: number of features
            assert X.dim() == 3, '[GRU]: Input must have 3 dimensions, i.e. [MxSxN]'
            batch_index = 0 if self.batch_first else 1

            # Initial hidden state: (num_layers * num_directions, batch, hidden)
            h_0 = torch.zeros(self.num_layers * self.num_directions, X.size(batch_index),
                              self.hidden_dimensions, device=X.device, dtype=X.dtype)
            output_gru, h_n = self.gru(X, h_0)

            # nn.Linear acts on the last dimension, so each time step gets its own
            # prediction from the same layer.
            fc_output = self.fc(output_gru)
            return fc_output  # batch_size x seq_len x num_classes (when batch_first)
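
For reference, here is a minimal shape check of the GRU-plus-linear pipeline described above. It is only a sketch: the hidden size of 16 is an arbitrary assumption chosen to keep it fast, and the variable names are illustrative rather than taken from the class.

```python
import torch
import torch.nn as nn

# Sizes from the question, except hidden_size, which is an assumed small value.
batch_size, seq_len, num_features = 256, 64, 4
hidden_size, num_classes = 16, 2

gru = nn.GRU(input_size=num_features, hidden_size=hidden_size,
             num_layers=2, batch_first=True)
fc = nn.Linear(hidden_size, num_classes)

X = torch.randn(batch_size, seq_len, num_features)

# If h_0 is omitted, nn.GRU uses a zero initial hidden state.
output_gru, h_n = gru(X)

# nn.Linear is applied to the last dimension of the 3D tensor.
logits = fc(output_gru)

print(output_gru.shape)  # [batch, seq_len, hidden]
print(h_n.shape)         # [num_layers, batch, hidden]
print(logits.shape)      # [batch, seq_len, num_classes]
```

With `batch_first=True` the per-step outputs keep the batch dimension first, while `h_n` is always laid out as layers x batch x hidden.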

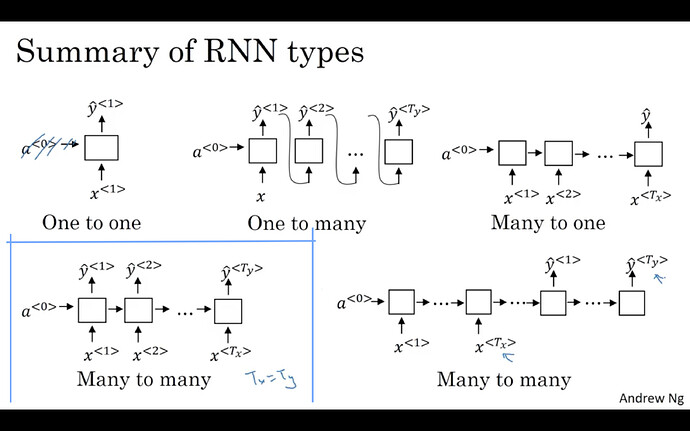

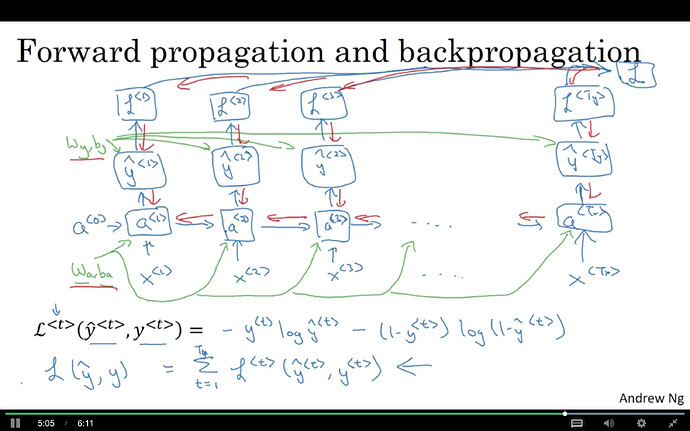

Assuming the above is correct, how do I write a loss function that sums the cross-entropy over every time step in the sequence (as illustrated in the figures above) and then averages over the batch?

Moreover, according to the figures, the weights w_y and b_y should be shared across time steps in the final layer.
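
One way to check the weight-sharing point is the small sketch below. It assumes a random tensor as a stand-in for the GRU output and arbitrary small sizes. It compares applying a single `nn.Linear` to the whole 3D tensor against applying the same layer step by step.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch_size, seq_len, hidden_size, num_classes = 8, 64, 32, 2

fc = nn.Linear(hidden_size, num_classes)  # holds a single weight matrix and bias
gru_output = torch.randn(batch_size, seq_len, hidden_size)  # stand-in for GRU output

# Apply the layer to all time steps at once.
all_steps = fc(gru_output)

# Apply the same layer one time step at a time and stack along the sequence axis.
per_step = torch.stack([fc(gru_output[:, t, :]) for t in range(seq_len)], dim=1)

shapes_match = all_steps.shape == (batch_size, seq_len, num_classes)
same_result = torch.allclose(all_steps, per_step, atol=1e-6)

# The parameter count does not depend on seq_len: hidden_size * num_classes + num_classes.
num_params = sum(p.numel() for p in fc.parameters())
print(shapes_match, same_result, num_params)
```

Since both routes give the same result, one linear layer on the full GRU output already shares its weight and bias across every time step.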

My overall doubt is how to write the loss function according to the figure while making sure that the weights are shared. (Regarding weight sharing, I am not sure whether this is a requirement: I know the weights should be shared in the input layer, but I am not sure about the output layer.) I don't know whether this is already in the framework or whether I have to find a way around it.
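
A loss of the kind described could be sketched as follows. The helper name `sequence_cross_entropy` is hypothetical (it is not a PyTorch function), and the sketch assumes the model returns logits of shape [batch, seq_len, num_classes] with integer labels of shape [batch, seq_len] or [batch, seq_len, 1].

```python
import torch
import torch.nn.functional as F


def sequence_cross_entropy(logits, targets):
    """Hypothetical helper: sum cross-entropy over time steps, average over the batch.

    logits:  [batch, seq_len, num_classes] raw scores (no softmax applied)
    targets: [batch, seq_len] or [batch, seq_len, 1] integer class labels
    """
    batch_size, seq_len, num_classes = logits.shape
    targets = targets.reshape(batch_size, seq_len).long()
    # Flatten batch and time so each (sample, time step) pair is one classification.
    total = F.cross_entropy(logits.reshape(-1, num_classes),
                            targets.reshape(-1),
                            reduction='sum')
    return total / batch_size


torch.manual_seed(0)
logits = torch.randn(256, 64, 2, requires_grad=True)  # stand-in for model output
targets = torch.randint(0, 2, (256, 64, 1))           # labels 0 or 1 per time step

loss = sequence_cross_entropy(logits, targets)
loss.backward()  # gradients flow back through every time step
print(loss.item())
```

`F.cross_entropy` applies log-softmax internally, so the model should return raw logits. Replacing `reduction='sum'` followed by the division with the default `reduction='mean'` would instead average over time steps as well, which only rescales the loss by the sequence length.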

Any help will be highly appreciated.

Thank you.