Is it possible to run a pre-trained Style model that was made in Tensorflow and have it be ported over to PyTorch to generate images?

Thank you so much! So there should be no problem in using a pre-trained model that was made in TF (.pkl file) for this?

In the notebook, it says

For completeness, our conversion is below, but you can skip it if you download the PyTorch-ready weights.

so you can use weights from TF, but there are some conversion steps. @ptrblck and I put the steps we took for the original authors’ weights in the notebook to help you.

Best regards

Thomas

Gotcha. Thank you! I got it to work; however, the results are pretty lackluster. Should I play around with some of the parameters? (Note: I’m running on CPU mode, should I switch to a GPU ?)

Output below (I tried running it a few times and got similar blurry results) :

Which weights are you using?

The ones in the reference code for now (same as the link above, Nvidia implementation):

karras2019stylegan-ffhq-1024x1024.pkl

Are you running the notebook with these weights and get this output?

If so, did you change any hyperparameters or anything else in the models?

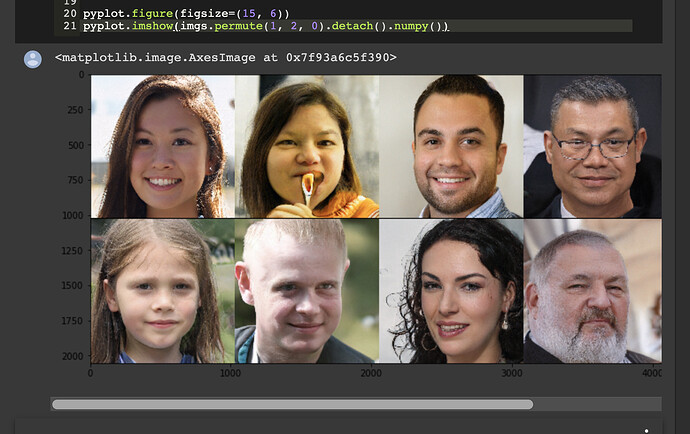

The outputs should look like the last two figures in the notebook.

Could you tell me which PyTorch version you are using so that we could debug it?

Yeah the exact same code as above minus the interpolation part at the very end.

My PyTorch version is on 1.1 (more exactly ‘1.1.0a0+fdaa77a’) and torchvision is on version 0.2.2

Thanks, I’ll try to reproduce this issue.

Hmmm, I tried running it on Google Colab (PyTorch Version 1.0.1) with its 1 GPU and it seems to work there.

Not sure why it’s producing those blobs on my local (CPU only)

Thank you so much - this is very well explained and easy to use. Correct me if I’m wrong - but the weights have been converted for the generator only and not for the discriminator?

Yes, the notebook doesn’t contain the discriminator implementation.

Well, I did eventually convert the discriminator, too, but never got around to putting it up. If it’s of help, I can put up my (very raw) notebook for that, too.

Yes, that’d be great!

Here, but it’s literally just the discriminator model.

Tom,

First off, great work, and thank you for sharing. I’ve trained a transfer learned model from the NVIDIA FFHQ model in TensorFlow. Using your code to load this transfer learned model, it produces the appropriate images, but the images have a muted dynamic range/strange color space. Effectively, they look exactly like the images the NVIDIA model produces, except there is a pinkish/gray tinge to them. Therefore, the network output is not the same between the two models. Unfortunately, I can’t share examples of the phenomena.

It sounds a bit like you have different parameters. We specialized to / hardcoded the parameters of the default model. You’d be able to modify the function to better reflect your output characteristics.

Best regards

Thomas

Thanks for the reply Tom. Do you know specifically where I should start with these modifications? I believed I was using the default configuration of the StyleGAN repo, and assumed that only the parameters values would change, after transfer learning (rather than the the operations or the weight/bias dimensions). One other issue I was concerned about was this bug in the StyleGAN repo.

Is it possible this would impact how the image is postprocessed?

Just in case anyone runs into the issue I described. The error was related to the fmap_decay parameter. I was trying to convert a StyleGAN-Tensorflow trained model that had been checkpointed halfway through the training of the 1024x1024 LOD (level of detail). As a result the alpha/fmap_decay parameter for the upscaling layer was only @~0.5. That led to me getting strange images, because the code posted here assumes an fmap_decay of 1.0 (which is appropriate for a fully trained LOD). If you plan on using a model that isn’t fully trained/checkpointed at the end of an LOD, try tuning this parameter so that you can get the same quality of image that you are seeing output by the tensorflow model.