Hi,

I am trying to quantize a model. The model I am using is the pretrained ResNet-18:

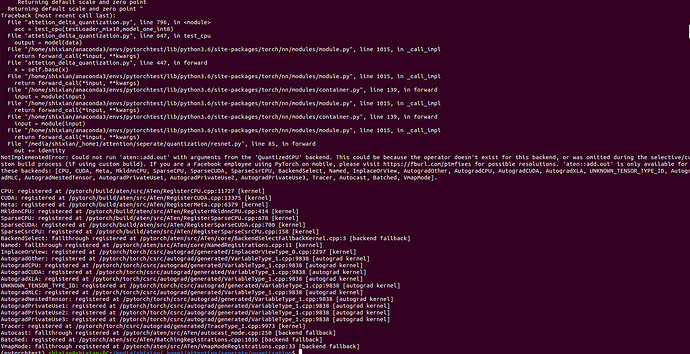

I am using quantization-aware training (QAT) and have run into a problem similar to https://discuss.pytorch.org/t/runtimeerror-could-not-run-aten-add-tensor-with-arguments-from-the-quantizedcpu-backend/110039. The code runs on the GPU first; before quantization, I move the model from the GPU to the CPU and quantize it. The quantization step itself seems to work. The problem arises later, when the quantized model is called to run the tester:

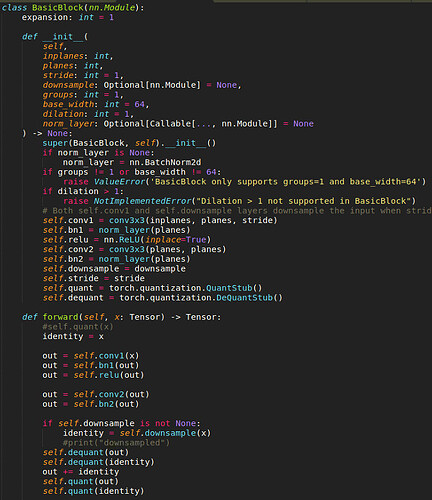

I tried the solution from that thread, adding `dequant()` and `quant()` around the `aten::add_.Tensor` call, but I still get the error `Could not run 'aten::add_.Tensor' with arguments from the 'QuantizedCPU' backend`.
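For reference, the failing operation can be reproduced in isolation. This is a minimal sketch, assuming a PyTorch build with quantization support; the tensor values, scale and zero point are arbitrary. An in-place add between two quantized tensors dispatches to `aten::add_.Tensor`, which has no QuantizedCPU kernel, whereas dequantizing, adding in float and re-quantizing goes through. Depending on the PyTorch version the exception is a `RuntimeError` or a `NotImplementedError`. It only shows tensor-level behaviour, not the model below.

```python
import torch

# Two small quantized tensors (scale and zero_point chosen arbitrarily).
a = torch.quantize_per_tensor(torch.tensor([0.5, 1.0, 1.5]), scale=0.1, zero_point=0, dtype=torch.quint8)
b = torch.quantize_per_tensor(torch.tensor([0.2, 0.4, 0.6]), scale=0.1, zero_point=0, dtype=torch.quint8)

# `a += b` dispatches to aten::add_.Tensor, which has no QuantizedCPU kernel.
try:
    a += b
    inplace_failed = False
except (RuntimeError, NotImplementedError) as err:
    inplace_failed = True
    print(type(err).__name__)

# Dequantize, add in float, then re-quantize: this path is supported.
c = torch.quantize_per_tensor(a.dequantize() + b.dequantize(), scale=0.1, zero_point=0, dtype=torch.quint8)
print(c.dequantize())
```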

I even tried replacing the addition with the line below, but I still get this error:

```python
out = torch.nn.quantized.modules.FloatFunctional().add(out, identity)
```
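For comparison, a minimal sketch of the usual eager-mode pattern for a quantizable skip connection, on a hypothetical toy block (`TinyResidual` and the tensor sizes are made up for illustration and are not part of the model below). The `FloatFunctional` is registered once as a submodule, so `prepare_qat()` attaches a fake-quant observer to it and `convert()` swaps it for a `QFunctional`, whose `add` runs the quantized add kernel. The engine selection line is an assumption to keep the sketch portable across x86 and ARM.

```python
import torch
import torch.nn as nn

# Pick a quantized engine available on this machine (fbgemm on x86, qnnpack on ARM).
engine = "fbgemm" if "fbgemm" in torch.backends.quantized.supported_engines else "qnnpack"
torch.backends.quantized.engine = engine


class TinyResidual(nn.Module):
    """Hypothetical toy block: one conv plus a skip connection."""

    def __init__(self):
        super().__init__()
        self.quant = torch.quantization.QuantStub()
        self.conv = nn.Conv2d(3, 3, kernel_size=3, padding=1)
        # Registered as a submodule: prepare_qat() attaches a fake-quant observer
        # to it, and convert() replaces it with a QFunctional (quantized add).
        self.skip_add = torch.nn.quantized.FloatFunctional()
        self.dequant = torch.quantization.DeQuantStub()

    def forward(self, x):
        x = self.quant(x)
        identity = x
        out = self.conv(x)
        out = self.skip_add.add(out, identity)  # used instead of `out += identity`
        return self.dequant(out)


model = TinyResidual().train()
model.qconfig = torch.quantization.get_default_qat_qconfig(engine)
torch.quantization.prepare_qat(model, inplace=True)
model(torch.randn(2, 3, 8, 8))  # one forward pass stands in for QAT training

model_int8 = torch.quantization.convert(model.eval(), inplace=False)
out = model_int8(torch.randn(2, 3, 8, 8))
print(type(model_int8.skip_add).__name__, tuple(out.shape))
```

In this sketch a `FloatFunctional()` constructed inline inside `forward` would not be a registered submodule, so neither `prepare_qat()` nor `convert()` would see it.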

Here is my model:

```python
import torch
import torch.nn as nn
from torchvision.models import resnet  # assumption: torchvision's resnet module (or a local copy of resnet.py)

# superclasses, classes_* and task are globals defined elsewhere in my script (not shown).

class Resnet18_ONE(nn.Module):
    def __init__(self):
        super(Resnet18_ONE, self).__init__()
        # self.loss = loss
        self.quant = torch.quantization.QuantStub()
        resnet18_tmp = resnet.resnet18(pretrained=True)
        # set_parameter_requires_grad(resnet18, True)
        num_ftrs = resnet18_tmp.fc.in_features
        # num_classes = superclasses
        # Everything except the final fc layer.
        self.base = nn.Sequential(*list(resnet18_tmp.children())[:-1])
        # print(self.base)
        self.linear_sub = nn.Linear(num_ftrs, superclasses)
        self.linear_bird = nn.Linear(num_ftrs, classes_bird)
        self.linear_boat = nn.Linear(num_ftrs, classes_boat)
        self.linear_car = nn.Linear(num_ftrs, classes_car)
        self.linear_cat = nn.Linear(num_ftrs, classes_cat)
        self.linear_fungus = nn.Linear(num_ftrs, classes_fungus)
        self.linear_insect = nn.Linear(num_ftrs, classes_insect)
        self.linear_monkey = nn.Linear(num_ftrs, classes_monkey)
        self.linear_truck = nn.Linear(num_ftrs, classes_truck)
        self.linear_dog = nn.Linear(num_ftrs, classes_dog)
        self.linear_fruit = nn.Linear(num_ftrs, classes_fruit)
        self.dequant = torch.quantization.DeQuantStub()

    def forward(self, x):
        x = self.quant(x)
        x = self.base(x)
        x = self.dequant(x)
        x = torch.flatten(x, 1)
        # Select the classification head for the current task.
        if task == 'SUB':
            x = self.linear_sub(x)
        elif task == 'BIRD':
            # print("I am in bird")
            x = self.linear_bird(x)
        elif task == 'BOAT':
            x = self.linear_boat(x)
        elif task == 'CAR':
            x = self.linear_car(x)
        elif task == 'CAT':
            x = self.linear_cat(x)
        elif task == 'FUNGUS':
            x = self.linear_fungus(x)
        elif task == 'INSECT':
            x = self.linear_insect(x)
        elif task == 'MONKEY':
            x = self.linear_monkey(x)
        elif task == 'TRUCK':
            x = self.linear_truck(x)
        elif task == 'DOG':
            x = self.linear_dog(x)
        else:
            # print("enter fruit")
            x = self.linear_fruit(x)
        return x
```

Here are my settings for quantization-aware training:

```python
model_one.train()
model_one.qconfig = torch.quantization.get_default_qat_qconfig('fbgemm')
model_one_fused = torch.quantization.fuse_modules(model_one, [
    ['base.0', 'base.1'],
    ['base.4.0.conv1', 'base.4.0.bn1'], ['base.4.0.conv2', 'base.4.0.bn2'],
    ['base.4.1.conv1', 'base.4.1.bn1'], ['base.4.1.conv2', 'base.4.1.bn2'],
    ['base.5.0.conv1', 'base.5.0.bn1'], ['base.5.0.conv2', 'base.5.0.bn2'],
    ['base.5.1.conv1', 'base.5.1.bn1'], ['base.5.1.conv2', 'base.5.1.bn2'],
    ['base.6.0.conv1', 'base.6.0.bn1'], ['base.6.0.conv2', 'base.6.0.bn2'],
    ['base.6.1.conv1', 'base.6.1.bn1'], ['base.6.1.conv2', 'base.6.1.bn2'],
    ['base.7.0.conv1', 'base.7.0.bn1'], ['base.7.0.conv2', 'base.7.0.bn2'],
    ['base.7.1.conv1', 'base.7.1.bn1'], ['base.7.1.conv2', 'base.7.1.bn2'],
])
model_one_prepared = torch.quantization.prepare(model_one_fused)
torch.quantization.prepare_qat(model_one_prepared, inplace=True)
```
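The fusion list above follows a regular pattern, because `self.base` keeps torchvision's ResNet-18 child order (index 0 = `conv1`, 1 = `bn1`, 4 to 7 = `layer1` to `layer4`, two `BasicBlock`s each). A minimal sketch that generates the same 17 `[conv, bn]` pairs programmatically; the helper name `resnet18_fuse_pairs` is hypothetical:

```python
def resnet18_fuse_pairs(prefix="base"):
    """Rebuild the [conv, bn] name pairs used in the fuse_modules call.

    Assumes torchvision's ResNet-18 child order inside nn.Sequential:
    0 = conv1, 1 = bn1, 4..7 = layer1..layer4, two BasicBlocks per layer.
    """
    pairs = [[f"{prefix}.0", f"{prefix}.1"]]  # stem: conv1 + bn1
    for layer in range(4, 8):          # layer1 .. layer4
        for block in range(2):         # two BasicBlocks per layer
            for i in (1, 2):           # conv1/bn1, then conv2/bn2
                pairs.append([f"{prefix}.{layer}.{block}.conv{i}",
                              f"{prefix}.{layer}.{block}.bn{i}"])
    return pairs


pairs = resnet18_fuse_pairs()
print(len(pairs))
```

It would be passed as `torch.quantization.fuse_modules(model_one, resnet18_fuse_pairs())`. Like the hand-written list, it only pairs each convolution with its batch norm.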

Here is where I convert my model and test it:

```python
model_one_prepared.to('cpu')
model_one_int8 = torch.quantization.convert(model_one_prepared.eval(), inplace=False)
acc = test_cpu(testLoader_mix10, model_one_int8)
```