@ptrblck I finally got to test a bit more. Using different batch sizes worked for a while, but now that I've changed the input data it fails with pretty much every batch size I've tried.

Setting CUDA_LAUNCH_BLOCKING=1 didn't help: the process allocated some memory on the GPU, but then got stuck and didn't respond to any keyboard interrupts.
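Since the hung process ignored keyboard interrupts, one option is to start training from a small wrapper that enforces a timeout, so a hang cannot block the session indefinitely. This is a minimal standard-library sketch, not a fix for the hang itself. The helper names are hypothetical, and `CUDNN_LOGINFO_DBG` / `CUDNN_LOGDEST_DBG` are assumed to be the cuDNN 7.x API-logging switches that produce a log like the one further down.

```python
import os
import subprocess
import sys


def debug_env(base=None):
    """Copy of the environment with CUDA/cuDNN debugging switches turned on."""
    env = dict(os.environ if base is None else base)
    env["CUDA_LAUNCH_BLOCKING"] = "1"    # run CUDA kernels synchronously
    env["CUDNN_LOGINFO_DBG"] = "1"       # assumption: cuDNN 7.x API logging switch
    env["CUDNN_LOGDEST_DBG"] = "stderr"  # assumption: where cuDNN writes that log
    return env


def run_with_timeout(cmd, timeout_s):
    """Run cmd with the debug environment.

    Returns the child's exit code, or None if it ran longer than timeout_s
    seconds (subprocess.run kills the child before raising TimeoutExpired).
    """
    try:
        return subprocess.run(cmd, env=debug_env(), timeout=timeout_s).returncode
    except subprocess.TimeoutExpired:
        return None
```

Usage would look like `run_with_timeout([sys.executable, "train.py"], timeout_s=900)`, where `train.py` is a placeholder for the real training script. If the process is stuck inside the driver, even the kill may take a while to take effect.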

```
Defaults for this optimization level are:
enabled : True
opt_level : O1
cast_model_type : None
patch_torch_functions : True
keep_batchnorm_fp32 : None
master_weights : None
loss_scale : dynamic
Processing user overrides (additional kwargs that are not None)...
After processing overrides, optimization options are:
enabled : True
opt_level : O1
cast_model_type : None
patch_torch_functions : True
keep_batchnorm_fp32 : None
master_weights : None
loss_scale : dynamic
Gradient overflow. Skipping step, reducing loss scale to 32768.0
Train Epoch: 1 [0/111 (0%)] Loss: 197.427246
Gradient overflow. Skipping step, reducing loss scale to 16384.0
Train Epoch: 1 [36/111 (30%)] Loss: 208.496323
Train Epoch: 1 [72/111 (60%)] Loss: 261.294617
```
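The two "Gradient overflow" messages are what dynamic loss scaling normally prints early in training. Any step whose gradients contain inf/NaN is skipped and the scale is halved, here from 65536 to 32768 to 16384. Below is a minimal pure-Python sketch of that logic. It assumes apex-like defaults (initial scale 2**16, factor 2, growth after 2000 overflow-free steps), uses class and function names of my own, and represents gradients as plain floats rather than tensors.

```python
import math


def has_overflow(grads):
    """True if any gradient value is inf or NaN (this triggers a skipped step)."""
    return any(not math.isfinite(g) for g in grads)


class DynamicLossScaler:
    """Toy dynamic loss scaler; defaults are assumed to mirror apex amp's."""

    def __init__(self, init_scale=2.0 ** 16, factor=2.0, window=2000, min_scale=1.0):
        self.scale = init_scale
        self.factor = factor
        self.window = window  # overflow-free steps required before growing
        self.min_scale = min_scale
        self._clean_steps = 0

    def update(self, grads):
        """Return True if the optimizer step should be applied for these grads."""
        if has_overflow(grads):
            self.scale = max(self.scale / self.factor, self.min_scale)
            self._clean_steps = 0
            print(f"Gradient overflow. Skipping step, reducing loss scale to {self.scale}")
            return False
        self._clean_steps += 1
        if self._clean_steps % self.window == 0:
            self.scale *= self.factor  # grow again after a run of clean steps
        return True


scaler = DynamicLossScaler()
scaler.update([float("inf")])  # prints "... reducing loss scale to 32768.0"
scaler.update([float("nan")])  # prints "... reducing loss scale to 16384.0"
scaler.update([0.5, -1.25])    # step applied, scale stays at 16384.0
```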

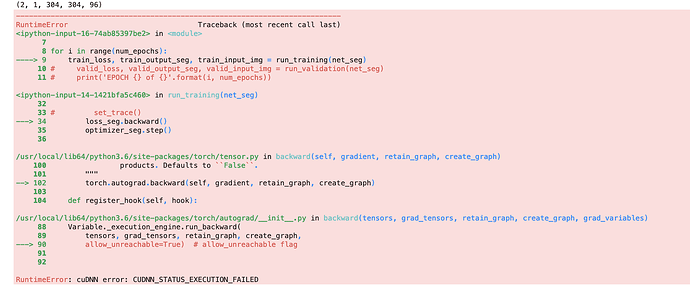

```
Traceback (most recent call last):
  File "/usr/local/lib64/python3.6/site-packages/torch/tensor.py", line 102, in backward
    torch.autograd.backward(self, gradient, retain_graph, create_graph)
  File "/usr/local/lib64/python3.6/site-packages/torch/autograd/__init__.py", line 90, in backward
    allow_unreachable=True) # allow_unreachable flag
RuntimeError: cuDNN error: CUDNN_STATUS_EXECUTION_FAILED
```

```
I! CuDNN (v7402) function cudnnGetConvolutionBackwardFilterAlgorithmMaxCount() called:
i! handle: type=cudnnHandle_t; streamId=(nil) (defaultStream);
i! Time: 2019-05-16T16:12:59.272653 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=0; Handle=0x7fa31800ad00; StreamId=(nil) (defaultStream).
I! CuDNN (v7402) function cudnnGetConvolutionBackwardFilterAlgorithmMaxCount() called:
i! handle: type=cudnnHandle_t; streamId=(nil) (defaultStream);
i! Time: 2019-05-16T16:12:59.272662 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=0; Handle=0x7fa31800ad00; StreamId=(nil) (defaultStream).
I! CuDNN (v7402) function cudnnGetConvolutionBackwardFilterWorkspaceSize() called:
i! handle: type=cudnnHandle_t; streamId=(nil) (defaultStream);
i! xDesc: type=cudnnTensorDescriptor_t:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[2,16,128,128,64];
i! strideA: type=int; val=[16777216,1048576,8192,64,1];
i! dyDesc: type=cudnnTensorDescriptor_t:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[2,16,128,128,64];
i! strideA: type=int; val=[16777216,1048576,8192,64,1];
i! convDesc: type=cudnnConvolutionDescriptor_t:
i! mode: type=cudnnConvolutionMode_t; val=CUDNN_CROSS_CORRELATION (1);
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_FLOAT (0);
i! mathType: type=cudnnMathType_t; val=CUDNN_TENSOR_OP_MATH (1);
i! arrayLength: type=int; val=3;
i! padA: type=int; val=[1,1,1];
i! strideA: type=int; val=[1,1,1];
i! dilationA: type=int; val=[1,1,1];
i! groupCount: type=int; val=1;
i! dwDesc: type=cudnnFilterDescriptor_t:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! vect: type=int; val=0;
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[16,16,3,3,3];
i! format: type=cudnnTensorFormat_t; val=CUDNN_TENSOR_NCHW (0);
i! algo: type=cudnnConvolutionBwdFilterAlgo_t; val=CUDNN_CONVOLUTION_BWD_FILTER_ALGO_1 (1);
i! Time: 2019-05-16T16:12:59.272682 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=0; Handle=0x7fa31800ad00; StreamId=(nil) (defaultStream).
I! CuDNN (v7402) function cudnnConvolutionBackwardFilter() called:
i! handle: type=cudnnHandle_t; streamId=(nil) (defaultStream);
i! alpha: type=CUDNN_DATA_FLOAT; val=1.000000;
i! xDesc: type=cudnnTensorDescriptor_t:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[2,16,128,128,64];
i! strideA: type=int; val=[16777216,1048576,8192,64,1];
i! xData: location=dev; addr=0x7f9f38000000;
i! dyDesc: type=cudnnTensorDescriptor_t:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[2,16,128,128,64];
i! strideA: type=int; val=[16777216,1048576,8192,64,1];
i! dyData: location=dev; addr=0x7f9f40000000;
i! convDesc: type=cudnnConvolutionDescriptor_t:
i! mode: type=cudnnConvolutionMode_t; val=CUDNN_CROSS_CORRELATION (1);
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_FLOAT (0);
i! mathType: type=cudnnMathType_t; val=CUDNN_TENSOR_OP_MATH (1);
i! arrayLength: type=int; val=3;
i! padA: type=int; val=[1,1,1];
i! strideA: type=int; val=[1,1,1];
i! dilationA: type=int; val=[1,1,1];
i! groupCount: type=int; val=1;
i! algo: type=cudnnConvolutionBwdFilterAlgo_t; val=CUDNN_CONVOLUTION_BWD_FILTER_ALGO_1 (1);
i! workSpace: location=dev; addr=0x7fa026920000;
i! workSpaceSizeInBytes: type=size_t; val=2754704;
i! beta: type=CUDNN_DATA_FLOAT; val=0.000000;
i! dwDesc: type=cudnnFilterDescriptor_t:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! vect: type=int; val=0;
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[16,16,3,3,3];
i! format: type=cudnnTensorFormat_t; val=CUDNN_TENSOR_NCHW (0);
i! dwData: location=dev; addr=0x7fa059ff3000;
i! Time: 2019-05-16T16:12:59.272707 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=0; Handle=0x7fa31800ad00; StreamId=(nil) (defaultStream).
I! CuDNN (v7402) function cudnnDestroyConvolutionDescriptor() called:
i! Time: 2019-05-16T16:12:59.272739 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnDestroyFilterDescriptor() called:
i! Time: 2019-05-16T16:12:59.272749 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnDestroyTensorDescriptor() called:
i! Time: 2019-05-16T16:12:59.272757 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnDestroyTensorDescriptor() called:
i! Time: 2019-05-16T16:12:59.272765 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnSetStream() called:
i! handle: type=cudnnHandle_t; streamId=(nil) (defaultStream);
i! streamId: type=cudaStream_t; streamId=(nil) (defaultStream);
i! Time: 2019-05-16T16:12:59.272774 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=0; Handle=0x7fa31800ad00; StreamId=(nil) (defaultStream).
I! CuDNN (v7402) function cudnnCreateTensorDescriptor() called:
i! Time: 2019-05-16T16:12:59.272789 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnSetTensorNdDescriptor() called:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[1,16,1,1,1];
i! strideA: type=int; val=[16,1,1,1,1];
i! Time: 2019-05-16T16:12:59.272799 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnCreateTensorDescriptor() called:
i! Time: 2019-05-16T16:12:59.272808 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnSetTensorNdDescriptor() called:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[2,16,128,128,64];
i! strideA: type=int; val=[16777216,1048576,8192,64,1];
i! Time: 2019-05-16T16:12:59.272818 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnConvolutionBackwardBias() called:
i! handle: type=cudnnHandle_t; streamId=(nil) (defaultStream);
i! alpha: type=CUDNN_DATA_FLOAT; val=1.000000;
i! srcDesc: type=cudnnTensorDescriptor_t:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[2,16,128,128,64];
i! strideA: type=int; val=[16777216,1048576,8192,64,1];
i! srcData: location=dev; addr=0x7f9f40000000;
i! beta: type=CUDNN_DATA_FLOAT; val=0.000000;
i! destDesc: type=cudnnTensorDescriptor_t:
i! dataType: type=cudnnDataType_t; val=CUDNN_DATA_HALF (2);
i! nbDims: type=int; val=5;
i! dimA: type=int; val=[1,16,1,1,1];
i! strideA: type=int; val=[16,1,1,1,1];
i! destData: location=dev; addr=0x7fa5e0efe200;
i! Time: 2019-05-16T16:12:59.272833 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=0; Handle=0x7fa31800ad00; StreamId=(nil) (defaultStream).
I! CuDNN (v7402) function cudnnDestroyTensorDescriptor() called:
i! Time: 2019-05-16T16:12:59.272849 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnDestroyTensorDescriptor() called:
i! Time: 2019-05-16T16:12:59.272857 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12826; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnDestroy() called:
i! Time: 2019-05-16T16:12:59.477687 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12596; GPU=NULL; Handle=NULL; StreamId=NULL.
I! CuDNN (v7402) function cudnnDestroy() called:
i! Time: 2019-05-16T16:12:59.477978 (0d+0h+1m+10s since start)
i! Process=12596; Thread=12596; GPU=NULL; Handle=NULL; StreamId=NULL.
```
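As a sanity check on the descriptors in the failing cudnnConvolutionBackwardFilter call, the arithmetic below recomputes three things from the logged values. It is pure Python and the helper names are my own. It confirms that x is a contiguous 5D tensor of shape [2,16,128,128,64], that a 3x3x3 filter with padding 1, stride 1 and dilation 1 preserves the spatial size (so dy has the same shape as x, as logged), and that each of these fp16 activations takes 64 MiB. This only shows the descriptors are self-consistent; it does not explain the CUDNN_STATUS_EXECUTION_FAILED.

```python
import operator
from functools import reduce


def contiguous_strides(shape):
    """Row-major (C-contiguous) strides, in elements, for a tensor shape."""
    strides, step = [], 1
    for dim in reversed(shape):
        strides.append(step)
        step *= dim
    return strides[::-1]


def conv_out_size(size, kernel, pad=0, stride=1, dilation=1):
    """Output length of one convolution dimension (standard formula)."""
    return (size + 2 * pad - dilation * (kernel - 1) - 1) // stride + 1


# Values copied from the cuDNN log above.
x_shape = [2, 16, 128, 128, 64]
x_strides = [16777216, 1048576, 8192, 64, 1]
w_shape = [16, 16, 3, 3, 3]  # [out_channels, in_channels, kD, kH, kW]
pad, stride, dilation = 1, 1, 1

is_contiguous = contiguous_strides(x_shape) == x_strides
n_elems = reduce(operator.mul, x_shape, 1)
n_bytes = n_elems * 2  # CUDNN_DATA_HALF: 2 bytes per element
out_shape = [x_shape[0], w_shape[0]] + [
    conv_out_size(s, k, pad, stride, dilation)
    for s, k in zip(x_shape[2:], w_shape[2:])
]

print(is_contiguous)             # True
print(n_elems, n_bytes / 2**20)  # 33554432 64.0
print(out_shape)                 # [2, 16, 128, 128, 64]
```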

Let me know if you have any ideas about what I can try next.

Thanks a lot,

Christian