Hi,

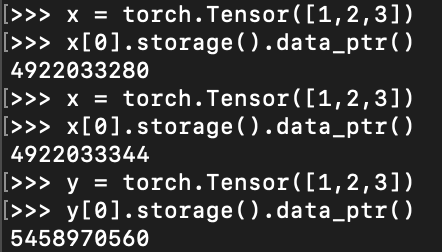

I ran the following code on the CPU and found that the memory locations of the first x[0], the second x[0] and y[0] are all different.

I think this is quite strange. If x and y were Python lists, then id() of the first x[0], the second x[0] and y[0] would be the same.
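For comparison, here is a minimal sketch of the list behavior described above. The original code is only shown in the image, so this assumes (hypothetically) that y was built as a shallow copy of x:

```python
# Hypothetical reconstruction: the original code is only available as an image.
x = [1.0, 2.0]
y = list(x)  # shallow copy: a new list that references the same element objects

print(id(x[0]) == id(x[0]))  # True: indexing a list returns the stored object itself
print(x[0] is y[0])          # True: the shallow copy shares its element objects
print(x is y)                # False: the two list containers are different objects
```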

I am looking forward to your help.

ptrblck


The created objects are tensors, not lists. Based on your code, they are not referencing each other, so their memory locations are expected to be different.
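A minimal sketch of the difference, assuming x and y are two independently created tensors (the names and values here are illustrative, not taken from the image). Indexing a tensor creates a new Tensor object each time, so id() is not a good way to compare memory; data_ptr() returns the address of the tensor's first element and is more informative:

```python
import torch

x = torch.tensor([1.0, 2.0])
y = torch.tensor([1.0, 2.0])  # created independently, so it allocates its own memory

a = x[0]  # each indexing operation returns a new 0-dim view object
b = x[0]
print(a is b)                              # False: two distinct Python objects
print(a.data_ptr() == b.data_ptr())        # True: both views point at the same element of x
print(x[0].data_ptr() == y[0].data_ptr())  # False: x and y do not share memory
```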


Thank you for your quick reply.

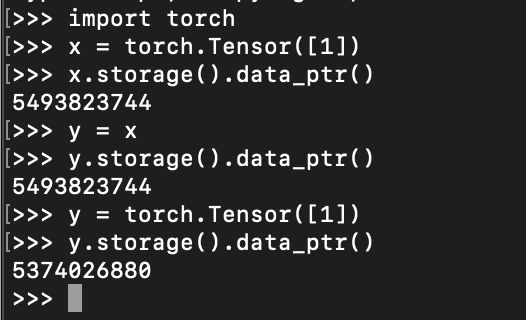

I am new to PyTorch and I’d like to ask one more question. Why do the two y tensors in the following code have different locations in memory?

Both the top and the bottom y are torch.Tensor([1]).

ptrblck


The first y tensor references x and thus shows the same storage, while the second y tensor holds its own memory and doesn’t reference any other object.
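A minimal sketch of this, assuming (hypothetically, since the exact code is only in the image) that the first y was created as a view of x and the second y with a fresh torch.Tensor([1]) call:

```python
import torch

x = torch.Tensor([1])

y_view = x[:]              # a view: new Tensor object, but same underlying storage as x
y_new = torch.Tensor([1])  # same values, but freshly allocated memory

print(y_view.data_ptr() == x.data_ptr())  # True: the view shares x's memory
print(y_new.data_ptr() == x.data_ptr())   # False: y_new owns its own memory
print(torch.equal(y_view, y_new))         # True: equal values despite different memory

x[0] = 5
print(y_view)  # the view sees the in-place change to x
print(y_new)   # the independent tensor is unaffected
```

Equal values do not imply shared memory; only tensors that reference the same storage (views) reflect each other's in-place changes.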