I am facing a problem similar to the one in this post (How to backpropagate a black box generated cost value - autograd - PyTorch Forums). In my case, however, the black box is about 90% known in advance.

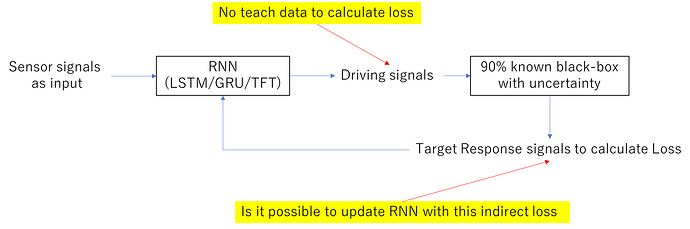

I use an RNN (LSTM, GRU, or TFT) to generate driving signals, but there is no label data (target signal) for them, so I cannot compute a loss on them directly. There is, however, a one-to-one relationship between the driving signals and the response signal, which is known in advance apart from some unknown noise. Target data is available for the response signal, so a loss can be computed there.

I am considering adding a decoder (another LSTM that models the relationship between the driving signal and the response). However, this might add unnecessary complexity, since that relationship is about 90% known in advance.
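One lighter alternative to a full decoder could be to write the known part of the relationship directly in torch operations and add only a small learned correction for the unknown part. The sketch below is just an illustration under assumptions: `known_part` is a made-up formula standing in for the real relationship, and `GrayBoxResponse` and its layer sizes are hypothetical names and choices, not something taken from my actual setup.

```python
import torch
import torch.nn as nn


class GrayBoxResponse(nn.Module):
    """Known driving -> response relation plus a small learned correction.

    Hypothetical illustration: the formula in known_part is a placeholder.
    """

    def __init__(self, hidden: int = 16):
        super().__init__()
        # Small network that is meant to absorb the unknown ~10% / noise.
        self.residual = nn.Sequential(
            nn.Linear(1, hidden),
            nn.Tanh(),
            nn.Linear(hidden, 1),
        )
        # Zero-initialise the last layer so the module starts out equal
        # to the known relation and only learns a deviation from it.
        nn.init.zeros_(self.residual[-1].weight)
        nn.init.zeros_(self.residual[-1].bias)

    @staticmethod
    def known_part(driving: torch.Tensor) -> torch.Tensor:
        # Placeholder for the pre-known relation, written in torch ops
        # so that it stays differentiable.
        return 2.0 * driving + 0.5 * driving ** 2

    def forward(self, driving: torch.Tensor) -> torch.Tensor:
        return self.known_part(driving) + self.residual(driving)


torch.manual_seed(0)
model = GrayBoxResponse()
driving = torch.randn(4, 10, 1)   # (batch, time, feature)
response = model(driving)         # same shape as driving
```

Because the correction starts at zero, the response initially equals the known relation, and the extra parameters are far fewer than in a second LSTM.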

I am now learning PyTorch's autograd, hoping that the RNN parameters can be updated through this indirect loss. I tried setting `requires_grad=True` on the response signal, but the model is not updated at all: the loss stays the same throughout training.
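A possible explanation for this symptom is that the response is computed outside autograd (for example via NumPy) and then wrapped in a new tensor. Such a tensor is a fresh leaf, so gradients stop there and never reach the RNN. The sketch below contrasts that with computing the response in torch operations. It is only a minimal illustration: `known_response` is a made-up formula, and the GRU, the linear `head`, and all tensor sizes are assumptions chosen for the example.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


def known_response(driving: torch.Tensor) -> torch.Tensor:
    # Hypothetical stand-in for the pre-known driving -> response relation.
    return 2.0 * driving + 0.5 * driving ** 2


rnn = nn.GRU(input_size=3, hidden_size=8, batch_first=True)
head = nn.Linear(8, 1)

x = torch.randn(4, 10, 3)        # (batch, time, features)
target = torch.randn(4, 10, 1)   # target for the response signal

hidden, _ = rnn(x)
driving = head(hidden)           # driving signal, no labels available

# Case 1: the response leaves the autograd graph and is re-wrapped.
response_np = known_response(driving.detach()).numpy()
response_cut = torch.tensor(response_np, requires_grad=True)  # new leaf
loss_cut = nn.functional.mse_loss(response_cut, target)
loss_cut.backward()
# The gradient stops at response_cut; the RNN parameters receive nothing.
cut_has_grad = any(p.grad is not None for p in rnn.parameters())

# Case 2: the response is computed with torch ops on the graph.
response = known_response(driving)
loss = nn.functional.mse_loss(response, target)
loss.backward()
# Now the gradient flows through known_response back into the RNN.
connected_has_grad = all(p.grad is not None for p in rnn.parameters())

print("graph cut, RNN has grads:", cut_has_grad)
print("graph kept, RNN has grads:", connected_has_grad)
```

In the first case an optimizer step would leave the RNN unchanged, which would match a loss that never moves. In the second case no `requires_grad=True` is needed on the response at all, since it inherits the graph from the model output.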

Any suggestions?

Best regards.