Hi there, I’m learning pytorch (with VS Code) and facing an odd warning.

If I run my codes without breakpoints, it works just fine. However, when I set some breakpoints and run in debug mode, SOMETIMES this userwaring pops up:

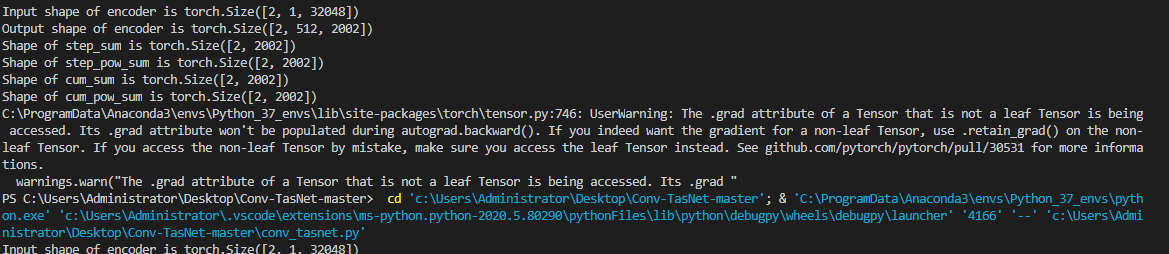

C:\ProgramData\Anaconda3\envs\Python_37_envs\lib\site-packages\torch\tensor.py:746: UserWarning: The .grad attribute of a Tensor that is not a leaf Tensor is being accessed. Its .grad attribute won’t be populated during autograd.backward(). If you indeed want the gradient for a non-leaf Tensor, use .retain_grad() on the non-leaf Tensor. If you access the non-leaf Tensor by mistake, make sure you access the leaf Tensor instead. See github.com/pytorch/pytorch/pull/30531 for more informations.

warnings.warn("The .grad attribute of a Tensor that is not a leaf Tensor is being accessed. Its .grad "

Even if I keep the same code and same breakpoints, the warning pops up occasionally(20% chance, give or take, and it pops up seconds after the breakpoints pause). I found one similar post

but it does not answer my question since there is no .to() operation in my codes.

Any tips on how to solve it? Thanks.

.

.