Hi, thank you so much for this code! I am a beginner and am learning about CNNs by working through the examples, which really helps. Can you guide me on how to visualize the last layer of my model?

If you would like to visualize the parameters of the last layer, you can access them directly, e.g. via model.last_layer.weight, and visualize them using e.g. matplotlib.

The activations would most likely be the return value and thus the model output, which can be visualized in a similar fashion. If you want to grab intermediate activations, you could use forward hooks and plot them after the forward pass was executed.
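As a rough illustration of the forward-hook approach, here is a minimal sketch. The small nn.Sequential model, the input size, and the names activations and save_activation are placeholders for illustration only, not part of the original code:

```python
import torch
import torch.nn as nn

# Hypothetical stand-in model; replace it with your own network.
model = nn.Sequential(
    nn.Conv2d(1, 4, kernel_size=3),   # 8x8 input -> 6x6 feature maps
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(4 * 6 * 6, 10),
)

activations = {}

def save_activation(name):
    # Returns a hook that stores the layer output under `name`.
    def hook(module, inputs, output):
        activations[name] = output.detach()
    return hook

# Register the hook on the layer whose output should be captured.
handle = model[0].register_forward_hook(save_activation("conv"))

with torch.no_grad():
    out = model(torch.randn(2, 1, 8, 8))  # the forward pass triggers the hook

handle.remove()  # remove the hook once it is no longer needed

print(activations["conv"].shape)  # torch.Size([2, 4, 6, 6])
print(out.shape)                  # torch.Size([2, 10])
```

The stored tensor can then be moved to the CPU and plotted after the forward pass.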

Let me know if that answers the question.

Hi, thanks. I have implemented it as per your comments. Can you tell me how to interpret the results?

As per your comment, I got the weights of fully connected layer 1, and their size is torch.Size([500, 800]).

However, I am unable to plot them and get this error: TypeError: Invalid shape (800,) for image data. I think the problem is that the fully connected layer is flattened, so it is a 1-D array. What is the solution for this?

You should be able to visualize a numpy array in the shape [500, 800] using matplotlib in the same way as done in your previous post. I guess you might be indexing the array, which would give a 1-D slice of shape (800,), so you could try to plot the full array directly instead.
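A minimal sketch of plotting the full weight matrix, assuming a freshly created nn.Linear as a stand-in for the fc1 layer (the colormap and figure size are arbitrary choices):

```python
import matplotlib.pyplot as plt
import torch.nn as nn

# Stand-in for the fc1 layer discussed in this thread (randomly initialized).
fc1 = nn.Linear(in_features=800, out_features=500)

# detach() drops the autograd graph; cpu() also makes this work for GPU models.
weights = fc1.weight.detach().cpu().numpy()  # shape (500, 800)

fig, ax = plt.subplots(figsize=(8, 5))
im = ax.imshow(weights, cmap="viridis", aspect="auto")  # pass the whole 2-D array
fig.colorbar(im, ax=ax, label="weight value")
ax.set_xlabel("input feature")
ax.set_ylabel("output unit")
```

Calling plt.show() afterwards displays the figure. Passing weights[0] instead would hand imshow a 1-D array of shape (800,), which is what triggers the "Invalid shape" error.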

I don’t know what kind of information you are looking for, but the color map indicates the value ranges, and you could try to interpret them as desired.

      (layer1): Sequential(
        (0): Conv2d(1, 20, kernel_size=(5, 5), stride=(1, 1))
        (1): ReLU()
        (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (layer2): Sequential(
        (0): Conv2d(20, 50, kernel_size=(5, 5), stride=(1, 1))
        (1): ReLU()
        (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (fc1): Linear(in_features=800, out_features=500, bias=True)
      (dropout1): Dropout(p=0.5, inplace=False)
      (fc2): Linear(in_features=500, out_features=10, bias=True)
    )>

This is my CNN model, and whenever I try to fetch the weights for layer 1, I get this error: ModuleAttributeError: ‘Sequential’ object has no attribute ‘weight’

Can you please help me with this error?

You would either have to index the nn.Sequential container and access the weight attribute of the indexed layer directly or access the submodule:

    model[0].weight  # assuming model is an nn.Sequential container
    # or
    model.fc1.weight  # to access the weight parameter of fc1
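Applied to the architecture printed above, this could look like the sketch below. The class name Net is an assumption, since the printout does not show it; the layers are copied from the printout:

```python
import torch.nn as nn

class Net(nn.Module):  # hypothetical class name
    def __init__(self):
        super().__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(1, 20, kernel_size=5), nn.ReLU(), nn.MaxPool2d(2, 2)
        )
        self.layer2 = nn.Sequential(
            nn.Conv2d(20, 50, kernel_size=5), nn.ReLU(), nn.MaxPool2d(2, 2)
        )
        self.fc1 = nn.Linear(800, 500)
        self.dropout1 = nn.Dropout(p=0.5)
        self.fc2 = nn.Linear(500, 10)

model = Net()

# layer1 is a container without a weight of its own, so index into it first.
conv1_weight = model.layer1[0].weight
fc1_weight = model.fc1.weight

print(conv1_weight.shape)  # torch.Size([20, 1, 5, 5])
print(fc1_weight.shape)    # torch.Size([500, 800])
```

model.layer1.weight fails because the nn.Sequential container only holds the layers; the parameters belong to the Conv2d module at index 0.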

Yes, thanks a lot. My problem is solved.

What is the line output = model(data) used for? I did not understand it. Can you explain it?

I’m not sure if you are referring to this post, but if so, this line of code executes the forward pass of the model on the data input tensor.

Yes, I am talking about the post you referred to. Thank you for your reply.

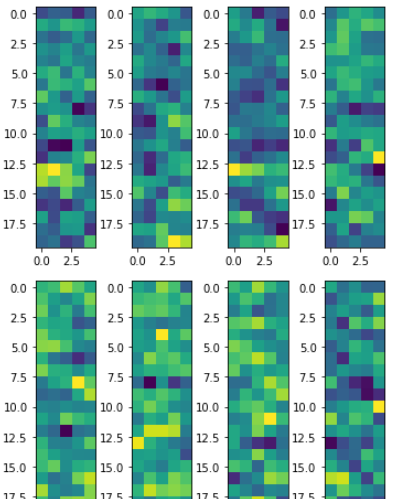

Please consider the following code for arranging the maps in four columns:

    # assumes act.size(0) is a multiple of 4
    fig, axarr = plt.subplots(act.size(0) // 4, 4, figsize=(50, 50), gridspec_kw={'wspace': 0.01, 'hspace': 0.01})
    #fig, axarr = plt.subplots(min(act.size(0), num_plot))
    for idx in range(act.size(0)):
        axarr[idx // 4, idx % 4].imshow(act[idx], cmap='hot', vmin=0, vmax=1)
    #fig.tight_layout()
    plt.xticks([])
    plt.yticks([])
    plt.show()

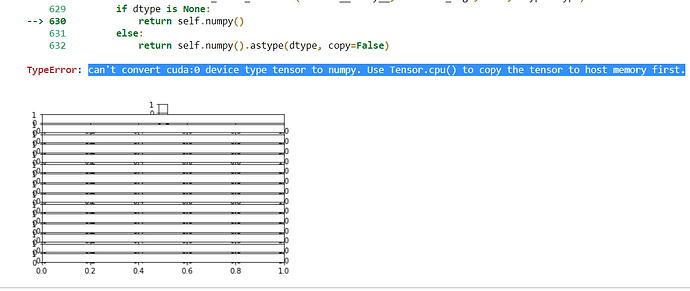

I am trying to visualize the feature map but I am getting “can’t convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.”

    testing_file = "data/test_gray.p"
    test_dataset = PickledDataset(testing_file, transform=test_data_transforms)
    test_loader = WrappedDataLoader(DataLoader(test_dataset, batch_size=16, shuffle=False), to_device)
    model = TrafficSignNet()
    check_point = torch.load('model.pt', map_location=device)
    model.load_state_dict(check_point)
    model.cuda()

    # Visualize feature maps
    activation = {}

    def get_activation(name):
        def hook(model, input, output):
            activation[name] = output.detach()
        return hook

    model.conv3.register_forward_hook(get_activation('conv3'))

    with torch.no_grad():
        data = next(iter(test_loader))[0].to(device)
        #data = data.cpu()
        #data, _ = dataset[0]
        #data.unsqueeze_(0)
        output = model(data)
        output.cpu().numpy()

    act = activation['conv3'].squeeze()
    fig, axarr = plt.subplots(act.size(0))
    for idx in range(act.size(0)):
        axarr[idx].imshow(act[idx])

Please help me solve this error.

Thank you in advance.

This is the code.

The error is “can’t convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.”

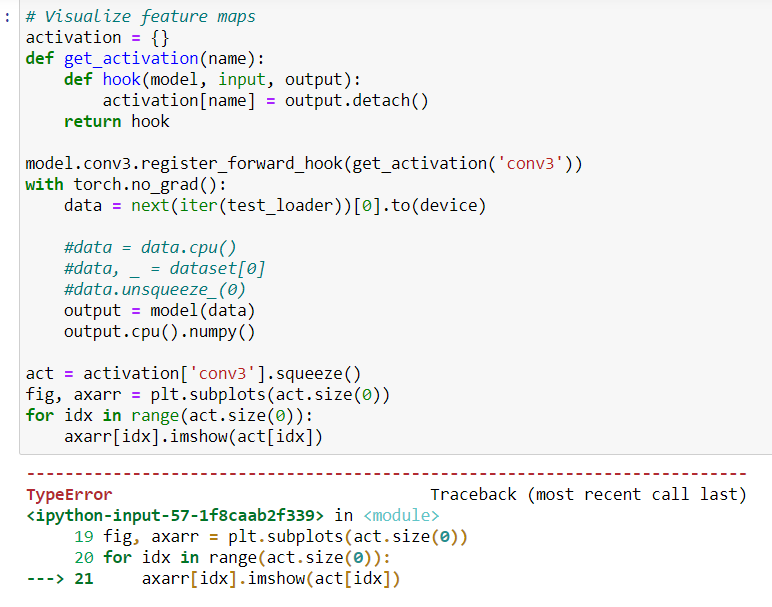

act[idx] seems to be a CUDATensor, while imshow expects a numpy array.

Did you try to use the suggestion from the error message, i.e. transferring the tensor to the CPU first?

    axarr[idx].imshow(act[idx].detach().cpu().numpy())

Thank you for your reply

Yes, I used this, but now I am getting "TypeError: Invalid shape (250, 6, 6) for image data".

I am using a pickled dataset.

I am not able to figure it out.

plt.imshow expects the numpy array to have a “standard” image format, i.e. either 3 channels or 1 channel, while your input seems to have 250 channels.

If that’s the case, you could try to visualize each channel as a grayscale image using a for loop.
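A minimal sketch of such a loop, assuming the hooked activation has the shape [batch, channels, height, width]. The helper plot_feature_maps, its parameters, and the random stand-in tensor are made up for illustration:

```python
import math

import matplotlib.pyplot as plt
import torch

def plot_feature_maps(act, sample=0, max_maps=16, cols=4):
    """Plot up to `max_maps` channels of one sample from a [N, C, H, W] activation."""
    # Pick a single sample, then move it to the CPU so matplotlib can use it.
    maps = act[sample].detach().cpu().numpy()  # shape [C, H, W]
    n = min(max_maps, maps.shape[0])
    rows = math.ceil(n / cols)
    fig, axes = plt.subplots(rows, cols, figsize=(2 * cols, 2 * rows), squeeze=False)
    for idx, ax in enumerate(axes.flat):
        ax.axis("off")  # hide ticks, also on unused axes
        if idx < n:
            ax.imshow(maps[idx], cmap="gray")  # each channel is a 2-D [H, W] array
    return fig

# Random stand-in for a hooked activation: batch of 16, 250 channels, 6x6 maps.
act = torch.randn(16, 250, 6, 6)
fig = plot_feature_maps(act)
```

With a batch of 16 images, squeeze() does not remove the batch dimension, so act[idx] is still a [250, 6, 6] tensor. Indexing one sample first and then looping over its channels gives imshow the 2-D arrays it expects. Call plt.show() afterwards to display the figure.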

Okay, I will try to do it. Thanks.

I am just a beginner and I am not able to write the for loop. Please help me with this code.

output size = torch.Size([16, 43])

data size = torch.Size([16, 1, 32, 32])

There are 43 classes and 16 images per batch.

    # Visualize feature maps
    activation = {}

    def get_activation(name):
        def hook(model, input, output):
            activation[name] = output.detach()
        return hook

    model.conv3.register_forward_hook(get_activation('conv3'))

    with torch.no_grad():
        data = next(iter(test_loader))[0].to(device)
        #data = data.cpu()
        #data, _ = dataset[0]
        #data.unsqueeze_(0)
        output = model(data)
        print(output.shape)
        print(data.shape)

    act = activation['conv3'].squeeze()
    fig, axarr = plt.subplots(act.size(0))
    for idx in range(act.size(0)):
        axarr[idx].imshow(act[idx].detach().cpu().numpy())

Hi there! I am trying to see the activations for my dataset, and I wanted to ask whether you could help me with the code as in this example. My model looks like this:

    class WiFiResNet4(nn.Module):
        def __init__(self, n_classes, img_size, input_channels):
            super().__init__()
            self.layer = nn.Sequential(
                nn.Conv2d(input_channels, 32, kernel_size=(5, 5), stride=(1, 1), padding=2, bias=False),
                nn.BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
                nn.Conv2d(32, 32, kernel_size=(1, 1), stride=(1, 1), bias=False),
                nn.BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
                nn.Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False),
                nn.BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
                nn.Conv2d(32, 64, kernel_size=(1, 1), stride=(1, 1), bias=False),
                nn.BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True),
            )
            self.fc = nn.Linear(self.fc_input, n_classes)
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    m.weight.data.normal_(0, 0.01)

        def forward(self, x):
            out = self.layer(x)
            flat = out.view(out.size(0), self.fc_input)
            score = self.fc(flat)
            return score

thanks a lot!
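For a model built around an nn.Sequential like this one, one possible approach is to register a forward hook on every conv layer in a loop. The sketch below uses a reduced stand-in for the layer attribute (first two conv blocks only) and assumes one input channel and a 16x16 input, since neither is given in the post:

```python
import torch
import torch.nn as nn

# Reduced stand-in for the `layer` attribute of WiFiResNet4 (assumed input_channels=1).
layer = nn.Sequential(
    nn.Conv2d(1, 32, kernel_size=5, padding=2, bias=False),
    nn.BatchNorm2d(32),
    nn.Conv2d(32, 32, kernel_size=1, bias=False),
    nn.BatchNorm2d(32),
)

activations = {}
handles = []
for name, module in layer.named_modules():
    if isinstance(module, nn.Conv2d):
        # Bind `name` as a default argument so each hook keeps its own key.
        def hook(mod, inputs, output, name=name):
            activations[name] = output.detach().cpu()
        handles.append(module.register_forward_hook(hook))

layer.eval()  # use the BatchNorm running statistics instead of batch statistics
with torch.no_grad():
    layer(torch.randn(1, 1, 16, 16))  # assumed input size

for handle in handles:
    handle.remove()

for name, act in activations.items():
    print(name, tuple(act.shape))
```

On the full model, calling named_modules() on the model itself would give keys such as layer.0 and layer.2, and each stored activation could then be plotted channel by channel.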