I think we had a similar discussion before in this topic and forward hooks should also work here.

Wouldn’t that be the Class Activation Map?

The output of a conv2d layer is the set of pixels that ‘activated’ the prediction.

And aren’t feature maps, the ‘kernel’ maps i.e. the filter applied (by each kernel) in order to obtain the CAMs ?

it’s an amazing package, does the job perfectly.

The only issue I had with it (as far as I remember) was the fact that it sort of “unfolds” the models.

E.g. resnet101 has 4 bottleneck layers, which are then “unfolded” and feature maps are extracted for the all of the conv2d layers. Which (if visualising all of them) renders them unreadable (to be fair I was placing them on the tensorboard rather than saving so I guess I shot myself in the foot there)

So one simply has to limit (every N or predefined indices) of layers they desire to visualise.

made it a bit easier for myself to work with it.

We might use different terminology, but I wouldn’t call the filters/weights features.

Anyway, if you want to visualize the filters, you can directly access them via model.layer.weight and plot them using e.g. matplotlib.

You’re right, I looked it up and read around and feature maps and class activation maps seem to be used interchangeably.

I see it now, feature maps are the outputs of each conv2d layer,

is a combination of all these maps and weights!

Hence the Score.

I got it slightly confused.

Thanks for the answer!

I’ve just pushed a change to make this easier.

You can now specify the layer name (you could before but its now working better)

And also the recursion depth (how far into nested sequential blocks it will go)

I.e

fe.set_image("pug.jpg" , allowed_modules = ["bottleneck"])

will only output the bottleneck layers (in the pretraied pytorch model there are actually 30 odd)

or

fe.set_image("pug.jpg", allowed_depth = 0)

will only output these layers

['Conv2d', 'BatchNorm2d', 'ReLU', 'MaxPool2d', 'Sequential (Block)', 'Sequential (Block)', 'Sequential (Block)', 'Sequential (Block)']

You could use both parameters together and do this.

fe.set_image("pug.jpg", allowed_modules=["conv"], allowed_depth = 2)

Hope this helps you somewhat.

Hello,

Has anyone done this (e.g. hook) with C++ and Libtorch?

Thanks

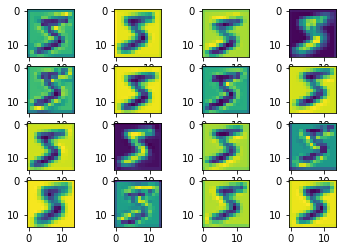

Hope this snippet will help you to plot 16 outputs in the subplot:

fig, axarr = plt.subplots(4, 4)

k = 0

for idx in range(act.size(0)//4):

for idy in range(act.size(0)//4):

axarr[idx, idy].imshow(act[k])

k += 1

For example, if we consider @ptrblck code snippet and change the conv2 layer as 16 feature maps for the visualization, the output could look like:

Hi, I have one doubt in addition to this.

While calculating the loss i.e. nn.CrossEntopyLoss(output,labels) in this, we use output of last layer or final output of the network.

But I want to use feature maps of each convolutional layer in loss calculation as well in addition to above calculation.

Can you please throw some light, how can it be done?

It depends which target you would like to use for the intermediate activations.

Since they are conv outputs you won’t be able to use e.g. nn.CrossEntropyLoss directly since these outputs don’t represent logits for the classification use case as they have a different shape.

However, you could follow a similar approach as seen in Inception models, which create auxiliary outputs forked from intermediate outputs and use these aux. output layers to calculate the loss.

Actually I want to use discriminative loss in addition to cross entropy loss. The discriminative loss will be based on feature maps of each convolutional layers and cross entropy loss remains as usual. But I didn’t find any source so far, how to do it!

You could return the intermediate activations in the forward as seen here:

def forward(self, x):

x1 = self.layer1(x)

x2 = self.layer2(x1)

out = self.layer3(x2)

return out, x1, x2

and then calculate the losses separately:

out, x1, x2 = model(input)

loss = criterion(out, target) + criterion1(x1, target1) + criterion2(x2, target2)

loss.backward()

or alternatively you could use forward hooks to store the intermediate activations and calculate the loss using them. This post gives you an example on how to use forward hooks.

Thank you for the reply @ptrblck.

I actually created a new thread because the discussion deviates slightly and I have few more doubts. Can you please look at this?

If I do something like

Loss = my_Loss (x1, original_images)

for every layer the size of tensor will be different, thus it will give an error.

i.e. after 1 convolutional layer, x1 has a size of [batch_size, num_features, h,w] = [50, 12, 28, 28]

While that of original_images = [50, 3, 32, 32].

So, how to calculate loss then!

And one my more doubt is, can we do something to get all features (num_features=12 in this case) to be as distinct as possible from one another?

You could use additional conv/pooling/interpolation layers to create the same output size of the intermediate activations as the target (or input) tensor.

Alternatively you could also change the model architecture such that the spatial size won’t be changed, but again it depends on your use case.

I don’t know which approach would create activations meeting this requirement.

As far as we provide some kernel size, there will be a reduction in the size.

Size=( (W-K+2P)/ S )+1

I really don’t get your point.

Can you please give dummy examples of both the scenarios you suggested?

Yes, you cannot simply calculate a loss between arbitrarily sized tensors, so you would need to come up with a way to calculate the loss between intermediate activations (with different sizes) and a target.

To get the same spatial size you could e.g. use pooling/conv/interpolations while you would still have to make sure to create the same number of channels.

This can be done via convs again (or via reductions), but it depends on your use case.

Here is a small example:

act = torch.randn(1, 64, 24, 24)

target = torch.randn(1, 3, 28, 28)

# make sure same spatial size

act_interp = F.interpolate(act, (28, 28))

# create same channels

conv = nn.Conv2d(64, 3, 1)

act_interp_channels = conv(act_interp)

print(act_interp_channels.shape) # has same shape now

loss = F.mse_loss(act_interp_channels, target)

Thanks for the reply.

This is something new, I will try it.

So, can we say like pooling and interpolate corresponds to downscaling and upscaling?

And in another approach, I have a output tensor(still intermediate activations after each convolutional layer) of say size (batch_size= 100, channels= 32 , 1, 1) and target(which are labels now) of size (batch_size= 100, 1) provided we have no. of classes = 10.

So, for loss calculation i.e. Loss = lossFunc(output, labels), Is there any loss function, which can reduce the dimensionality from 32 to 10 and then calculate the loss?

Or I have to use a conv/linear layer for that and then calculate the loss? Because in this case it adds some more weights, which I want to avoid.

Yes, you could name it like that. Note that you could down- and upscale using an interpolation method.

I assume the shape should be [100, 10]?

If so you could either use a trainable layer (as you’ve already described) or try to reduce the channels using e.g. mean, sum etc.

However, a reduction might be a bit tricky in this use case, as the target size of 10 doesn’t fit nicely into the channel size of 32.

Generally, all operations would be allowed which map 32 values to 10.

ok that looks super cool  can you give some tips, code, steps of how you did it :)?

can you give some tips, code, steps of how you did it :)?