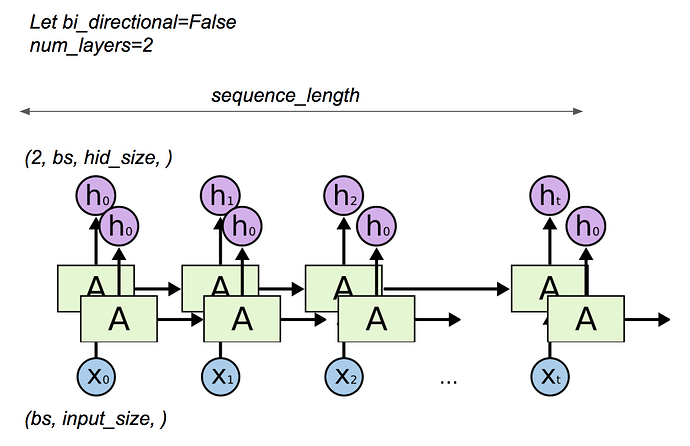

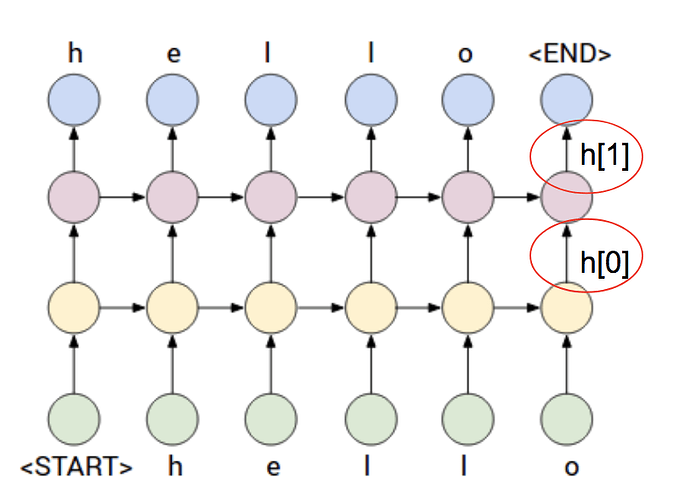

Hi, I am not sure about num_layers in the RNN module. To clarify, could you check whether my understanding is right? I uploaded an image for the case num_layers==2. In my understanding, num_layers is similar to a CNN's out_channels: it is just one RNN layer with different filters (so we can train different weight variables for outputting h). Right?

I am probably right…

    import torch
    import torch.nn as nn
    from torch.autograd import Variable  # deprecated since PyTorch 0.4; plain tensors also work

    class TestLSTM(nn.Module):
        def __init__(self, input_size, hidden_size, num_layers):
            super(TestLSTM, self).__init__()
            self.rnn = nn.LSTM(input_size, hidden_size, num_layers, batch_first=False)

        def forward(self, x, h, c):
            # nn.LSTM returns a tuple: (output, (h_n, c_n))
            out = self.rnn(x, (h, c))
            return out

    bs = 10
    seq_len = 7
    input_size = 28
    hidden_size = 50
    num_layers = 2

    test_lstm = TestLSTM(input_size, hidden_size, num_layers)
    print(test_lstm)

    # batch_first=False, so the input is (seq_len, batch, input_size)
    input = Variable(torch.randn(seq_len, bs, input_size))
    # Initial states are (num_layers, batch, hidden_size)
    h0 = Variable(torch.randn(num_layers, bs, hidden_size))
    c0 = Variable(torch.randn(num_layers, bs, hidden_size))

    # h is the tuple (h_n, c_n)
    output, h = test_lstm(input, h0, c0)
    print('output', output.size())
    print('h and c', h[0].size(), h[1].size())

---

    TestLSTM (
      (rnn): LSTM(28, 50, num_layers=2)
    )
    output torch.Size([7, 10, 50])
    h and c torch.Size([2, 10, 50]) torch.Size([2, 10, 50])
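
The shapes above do not by themselves tell me whether the two layers run side by side on the same input (like out_channels) or are stacked. One further check, as a rough sketch rather than a definitive answer, is to list the LSTM's parameters per layer. The names `lstm`, `shapes`, and `top_layer_matches` are my own for this example, and it uses plain tensors with default zero initial states instead of the `Variable` setup above. If layer 1's input weights are sized for hidden_size rather than input_size, that would suggest layer 1 consumes layer 0's output, i.e. the layers are stacked.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

seq_len, bs, input_size, hidden_size, num_layers = 7, 10, 28, 50, 2
lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=False)

# Collect parameter shapes by name; nn.LSTM names them
# weight_ih_l{k}, weight_hh_l{k}, bias_ih_l{k}, bias_hh_l{k} for layer k.
shapes = {name: tuple(p.shape) for name, p in lstm.named_parameters()}
for name, shape in shapes.items():
    print(name, shape)

# Layer 0 maps input_size -> 4 * hidden_size (the four LSTM gates),
# while layer 1 maps hidden_size -> 4 * hidden_size.
print(shapes["weight_ih_l0"])  # (200, 28)
print(shapes["weight_ih_l1"])  # (200, 50)

x = torch.randn(seq_len, bs, input_size)
output, (h_n, c_n) = lstm(x)  # initial states default to zeros

# output holds the last layer's hidden state at every time step, so its
# final step should equal the last layer's final hidden state h_n[-1].
top_layer_matches = torch.allclose(output[-1], h_n[-1])
print(top_layer_matches)
```

If `weight_ih_l1` comes out as (200, 50) and `output[-1]` matches `h_n[-1]`, then `output` only exposes the top layer, and the first dimension of `h_n` and `c_n` indexes the layers of the stack.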