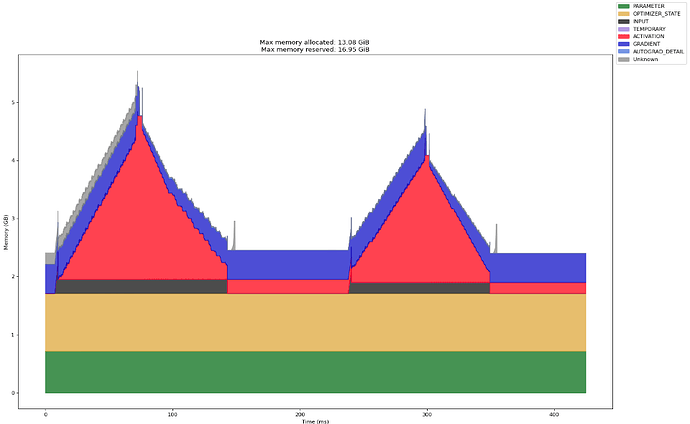

As I understand it, the y-axis shows occupied memory: the part of the reserved memory that is actually being used to store data at a given moment, such as active tensors and gradients. Instead of frequently asking CUDA to allocate and free memory, PyTorch keeps freed memory reserved and reuses it for new data. If I use `torch.cuda.empty_cache()` to release the cached memory (i.e. the unused part of the reserved memory), will it affect the speed?
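To make the trade-off concrete, here is a toy model. It is not PyTorch's actual allocator. The hypothetical `ToyCachingAllocator` only counts how often it would have to go to the driver (standing in for `cudaMalloc`/`cudaFree`). It assumes a cached block is reused only when the requested size matches exactly, which is a simplification.

```python
from collections import defaultdict


class ToyCachingAllocator:
    """Hypothetical, simplified caching allocator (NOT PyTorch's real one).

    Tracks sizes only and counts simulated driver calls, which are the
    slow operations a caching allocator tries to avoid.
    """

    def __init__(self):
        self.free_blocks = defaultdict(int)  # size -> number of cached free blocks
        self.allocated = 0     # memory held by live tensors ("occupied")
        self.reserved = 0      # memory obtained from the driver (occupied + cached)
        self.driver_calls = 0  # simulated cudaMalloc/cudaFree calls

    def malloc(self, size):
        if self.free_blocks[size] > 0:
            # Cache hit: reuse a block we already own, no driver call.
            self.free_blocks[size] -= 1
        else:
            # Cache miss: request new memory from the driver (slow path).
            self.driver_calls += 1
            self.reserved += size
        self.allocated += size
        return size

    def free(self, size):
        # Freed memory goes back to the cache, not to the driver.
        self.allocated -= size
        self.free_blocks[size] += 1

    def empty_cache(self):
        # Hand every cached (unused) block back to the driver.
        for size, count in self.free_blocks.items():
            self.driver_calls += count
            self.reserved -= size * count
        self.free_blocks.clear()


def run_steps(alloc, steps, sizes, empty_each_step):
    """Simulate training steps that allocate and free the same block sizes."""
    for _ in range(steps):
        blocks = [alloc.malloc(s) for s in sizes]  # e.g. activations, gradients
        for b in blocks:
            alloc.free(b)
        if empty_each_step:
            alloc.empty_cache()
    return alloc.driver_calls


if __name__ == "__main__":
    keep = ToyCachingAllocator()
    flush = ToyCachingAllocator()
    print("keep cache: ", run_steps(keep, 10, [4, 8, 8], empty_each_step=False))
    print("empty cache:", run_steps(flush, 10, [4, 8, 8], empty_each_step=True))
```

In this model, keeping the cache means only the first step pays for driver calls (3 in total over 10 steps), while emptying it after every step pays for them on every step (60 in total). A single flush outside a hot loop would cost far less than flushing on every iteration.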