Hi, I'd like to create a dataloader for input images of different sizes, but I don't know how to do that.

I've read the official tutorial on loading custom data ("Writing Custom Datasets, DataLoaders and Transforms" in the PyTorch Tutorials 2.1.1+cu121 documentation). However, in that tutorial all the input images are rescaled to 256x256 and randomly cropped to 224x224.

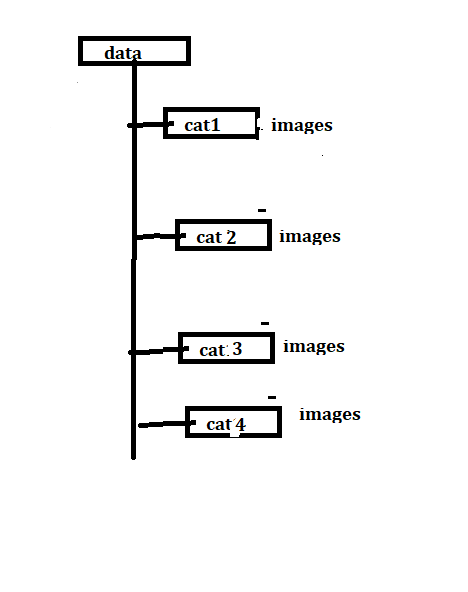

I'm working on a different image classification task, in which I want to keep the images at their original sizes and use a batch size greater than 1.

I noticed that there are related questions and issues, such as "[feature request] Support tensors of different sizes as batch elements in DataLoader" (Issue #1512 in pytorch/pytorch on GitHub).

However, none of the above gives concrete implementation details on how to create a dataloader for variable-size inputs.
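
One direction the `DataLoader` API seems to allow is passing a custom `collate_fn` that keeps the images in a plain Python list instead of stacking them into one tensor. Below is a minimal sketch of that idea, not a confirmed or recommended solution. It assumes a recent PyTorch version, and `VariableSizeImages` and `list_collate` are hypothetical names I made up, with random tensors standing in for real images.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class VariableSizeImages(Dataset):
    """Hypothetical dataset: random tensors stand in for images of different sizes."""

    def __init__(self, sizes):
        self.sizes = sizes  # list of (height, width) pairs, one per sample

    def __len__(self):
        return len(self.sizes)

    def __getitem__(self, idx):
        h, w = self.sizes[idx]
        # Same dict layout as in the tutorial: an image tensor plus a label.
        return {'image': torch.rand(3, h, w), 'label': idx % 2}


def list_collate(batch):
    """Hypothetical collate function: keep images as a list, stack only the labels."""
    images = [sample['image'] for sample in batch]  # sizes may differ
    labels = torch.tensor([sample['label'] for sample in batch])
    return {'image': images, 'label': labels}


dataset = VariableSizeImages([(32, 48), (64, 40), (50, 50), (20, 30)])
dataloader = DataLoader(dataset, batch_size=2, collate_fn=list_collate)

for i_batch, sample_batched in enumerate(dataloader):
    shapes = [tuple(img.shape) for img in sample_batched['image']]
    print(i_batch, shapes, sample_batched['label'].size())
```

With this layout the model would have to process the images in a batch one by one (or pad them first), since they are no longer a single tensor.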

In the meantime, I tried modifying the IPython notebook from that dataloader tutorial. I defined my own dataset class and my own ToTensor() function, and passed only ToTensor() as the transform parameter. In the "Iterating through the dataset" part, it shows only a few batches of data and then crashes like this:

    ---------------------------------------------------------------------------
    RuntimeError                              Traceback (most recent call last)
    in ()
         25 plt.title('Batch from dataloader')
         26
    ---> 27 for i_batch, sample_batched in enumerate(dataloader):
         28     print(i_batch, sample_batched['image'].size(),
         29           sample_batched['label'].size())

    /usr/local/lib/python2.7/dist-packages/torch/utils/data/dataloader.pyc in __next__(self)
        199                 self.reorder_dict[idx] = batch
        200                 continue
    --> 201             return self._process_next_batch(batch)
        202
        203     next = __next__  # Python 2 compatibility

    /usr/local/lib/python2.7/dist-packages/torch/utils/data/dataloader.pyc in _process_next_batch(self, batch)
        219             self._put_indices()
        220         if isinstance(batch, ExceptionWrapper):
    --> 221             raise batch.exc_type(batch.exc_msg)
        222         return batch
        223

    RuntimeError: Traceback (most recent call last):
      File "/usr/local/lib/python2.7/dist-packages/torch/utils/data/dataloader.py", line 40, in _worker_loop
        samples = collate_fn([dataset[i] for i in batch_indices])
      File "/usr/local/lib/python2.7/dist-packages/torch/utils/data/dataloader.py", line 106, in default_collate
        return {key: default_collate([d[key] for d in batch]) for key in batch[0]}
      File "/usr/local/lib/python2.7/dist-packages/torch/utils/data/dataloader.py", line 106, in
        return {key: default_collate([d[key] for d in batch]) for key in batch[0]}
      File "/usr/local/lib/python2.7/dist-packages/torch/utils/data/dataloader.py", line 91, in default_collate
        return torch.stack(batch, 0, out=out)
      File "/usr/local/lib/python2.7/dist-packages/torch/functional.py", line 66, in stack
        return torch.cat(inputs, dim, out=out)
    RuntimeError: inconsistent tensor sizes at /pytorch/torch/lib/TH/generic/THTensorMath.c:2709
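
From the last frames, my reading is that `default_collate` calls `torch.stack` on the images of a batch, and that this fails as soon as two images in the same batch differ in size. That would also explain why a few batches come through before the crash. Here is a minimal sketch that seems to reproduce the same kind of failure outside the dataloader. The tensor shapes are made up for illustration, and the exact error message may differ between PyTorch versions.

```python
import torch

# Two stand-in "images" with the same channel count but different spatial sizes.
a = torch.rand(3, 32, 48)
b = torch.rand(3, 64, 40)

# Stacking tensors of identical size works and adds a leading batch dimension.
same_size_batch = torch.stack([a, a], 0)
print(same_size_batch.shape)

# Stacking tensors of different sizes raises a RuntimeError.
try:
    torch.stack([a, b], 0)
    stack_succeeded = True
except RuntimeError as err:
    stack_succeeded = False
    print("stack failed:", err)
```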

Any idea? Thanks!