Hi everyone,

I am working on a project where a NN is used as a controller in a classic control problem (the inverted pendulum system). The problem is that the loss of the NN keeps increasing in each epoch. It seems that the NN has learn nothing and I wonder if the problem results from my design of the loss function.

Epoch 1

-------------------------------

loss: 12.320100

loss: 12.357852

loss: 12.433514

loss: 12.547467

loss: 12.700330

loss: 12.892957

loss: 13.126448

loss: 13.402157

loss: 13.721689

loss: 14.086917

loss: 14.499980

loss: 14.963307

loss: 15.479626

loss: 16.051975

loss: 16.683731

loss: 17.378611

loss: 18.140713

loss: 18.974531

loss: 19.884983

loss: 20.877436

loss: 21.957741

loss: 23.132269

loss: 24.407934

loss: 25.792259

loss: 27.293400

loss: 28.920189

loss: 30.682211

loss: 32.589840

loss: 34.654293

loss: 36.887722

loss: 39.303268

loss: 41.915127

loss: 44.738659

loss: 47.790466

loss: 51.088482

loss: 54.652081

loss: 58.502201

loss: 62.661446

loss: 67.154251

loss: 72.007004

loss: 77.248177

loss: 82.908539

loss: 89.021301

loss: 95.622337

loss: 102.750381

loss: 110.447235

loss: 118.758064

loss: 127.731606

loss: 137.420471

loss: 147.881485

loss: 159.175980

loss: 171.370178

loss: 184.535568

loss: 198.749283

loss: 214.094696

loss: 230.661697

loss: 248.547379

loss: 267.856598

loss: 288.702484

loss: 311.207184

loss: 335.502533

loss: 361.730896

loss: 390.045929

loss: 420.613556

loss: 453.612793

loss: 489.236969

loss: 527.694763

loss: 569.211426

loss: 614.030212

loss: 662.413452

The NN takes in the system states as input ( a 4*1 vector representing angle, angular velocity, displacement, and velocity ) and outputs a force F, which is the control signal. F changes the states of the system by x'=Ax+BF and the purpose of the NN is to generate proper F to keep the angle 0.

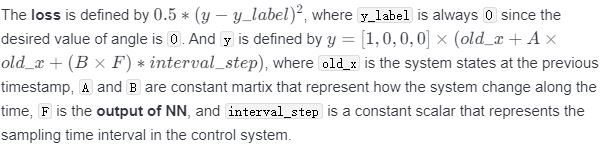

Different from the usual supervised learning, the label value of F is not known. Thus, I design the loss function based on the purpose and the relation between F and the angle.

Another difference is that the online learning style is used to train the NN. In each epoch, the training process is that I start the simulation of the inverted pendulum system, get the system states, input them to the NN, get the output F, calculate the system state at the next timestamp, calculate the loss, and update the weights of the NN. In short, the batchsize is 1.

I will be very appreciated if anyone can help me, Thx.

And my code is below.

import matplotlib.pyplot as plt

import numpy as np

import time

from matplotlib.animation import FuncAnimation

import torch

from torch import nn

from torchviz import make_dot

#------------------------------------------------------------------------- Define model

class NeuralNetwork(nn.Module):

def __init__(self):

super().__init__()

self.linear_relu_stack = nn.Sequential(

nn.Linear(4, 32),

nn.ReLU(),

nn.Linear(32, 8),

nn.ReLU(),

nn.Linear(8, 1)

)

def forward(self, x):

x = x.reshape((1,4))

logits = self.linear_relu_stack(x)

return logits

#-------------------------------------------------------------------------------define simulation and training in each epoch

def train(model, loss_fn, optimizer):

# total simulation time interval /seconds

t_time = 8

# time interval of each step /seconds

interval_step = 0.01

# total simulation loop numbers

loop = int(t_time / interval_step)

# print(loop)

model.train()

A = np.array([[0, 1, 0, 0], [15.244, 0, 0, 0], [0, 0, 0, 1], [-0.363, 0, 0, 0]])

A=torch.tensor(A,dtype=torch.float32)

B = np.array([[0], [-0.741], [0], [0.494]])

B = torch.tensor(B, dtype=torch.float32)

x_init = np.array([[round(np.random.uniform(-1, 1), 3) * 10], [0], [0], [0]])

#print(x_init)

x = x_init

F_list=[]

#start simulation loop

for i in range(loop):

old_x=x

old_x=torch.tensor(old_x,dtype=torch.float32)

#x'=Ax+Bu

F = model(old_x)

F_list.append(F)

interval_step_=torch.tensor(interval_step)

y= ( old_x + (torch.mm(A,old_x)+torch.mm(B,F))*interval_step_ )

x=y.detach().numpy()

y=torch.mm(torch.tensor([ [1,0,0,0] ] , dtype=torch.float32),y)

y_label=torch.tensor([[0.0]],dtype=torch.float32)

loss = loss_fn(y,y_label )

optimizer.zero_grad()

loss.backward()

optimizer.step()

loss= loss.item()

print(f"loss: {loss:>7f}")

if x[0][0]>25 or x[0][0]<-25: #angle bigger than 25 degree, means that the control fails

print("control fail in {} seconds".format(i*interval_step))

break

if __name__=="__main__":

model = NeuralNetwork()

print(model)

loss_fn = nn.MSELoss(reduction='sum')

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

epochs = 100

for t in range(epochs):

print(f"Epoch {t + 1}\n-------------------------------")

train(model, loss_fn, optimizer)

print("training Done!")

'''torch.save(model.state_dict(), "model-my-simu-pendulum.pth")

print("Saved PyTorch Model State to model-pendulum.pth")'''