Hi @ptrblck

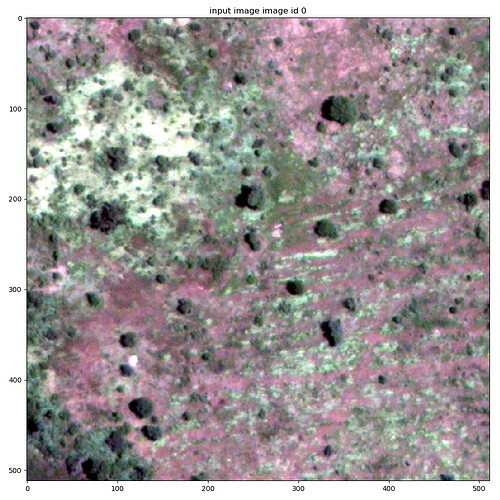

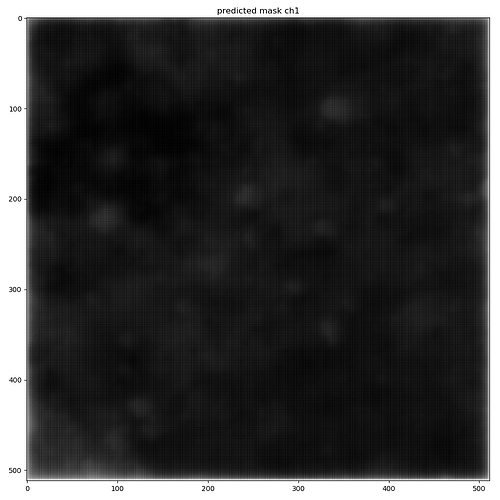

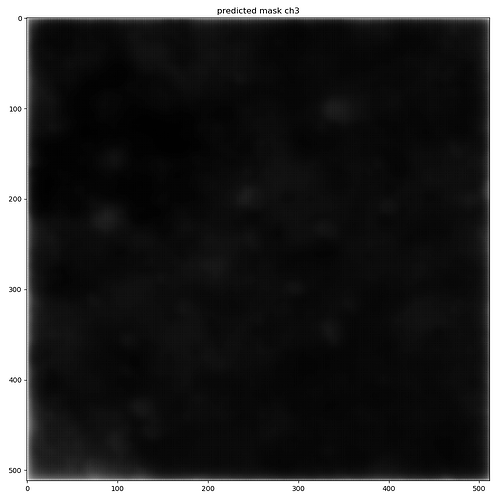

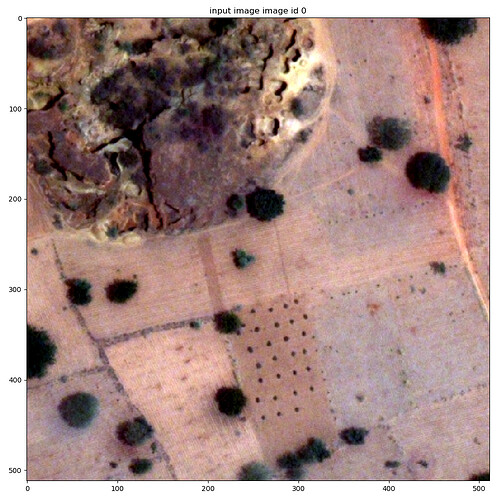

I made the changes to my model, switched to using a 3-ch input and generating a 10-ch mask with BCELoss.

When I try to run the train loop, I get this error for a single batch. I also get the exact same error when I use the U-Net model that you provide in the link.

2018-06-07 00:27:32 INFO | train_unet:train_and_evaluate:182: Epoch 1/10

0% 0/20 [00:00<?, ?it/s]/tool/python/conda/env/gis36/lib/python3.6/site-packages/torch/nn/functional.py:1474: UserWarning: Using a target size (torch.Size([1, 3, 256, 256])) that is different to the input size (torch.Size([1, 10, 256, 256])) is deprecated. Please ensure they have the same size.

"Please ensure they have the same size.".format(target.size(), input.size()))

Traceback (most recent call last):

File "/project/geospatial/application/cs230-sifd/source/main/train/train_unet.py", line 284, in <module>

main()

File "/project/geospatial/application/cs230-sifd/source/main/train/train_unet.py", line 280, in main

restore_file=params.restore_file)

File "/project/geospatial/application/cs230-sifd/source/main/train/train_unet.py", line 190, in train_and_evaluate

params=params)

File "/project/geospatial/application/cs230-sifd/source/main/train/train_unet.py", line 100, in train

loss = loss_fn(output_batch, labels_batch)

File "/tool/python/conda/env/gis36/lib/python3.6/site-packages/torch/nn/modules/module.py", line 491, in __call__

result = self.forward(*input, **kwargs)

File "/tool/python/conda/env/gis36/lib/python3.6/site-packages/torch/nn/modules/loss.py", line 433, in forward

reduce=self.reduce)

File "/tool/python/conda/env/gis36/lib/python3.6/site-packages/torch/nn/functional.py", line 1477, in binary_cross_entropy

"!= input nelement ({})".format(target.nelement(), input.nelement()))

ValueError: Target and input must have the same number of elements. target nelement (196608) != input nelement (655360)

Process finished with exit code 1

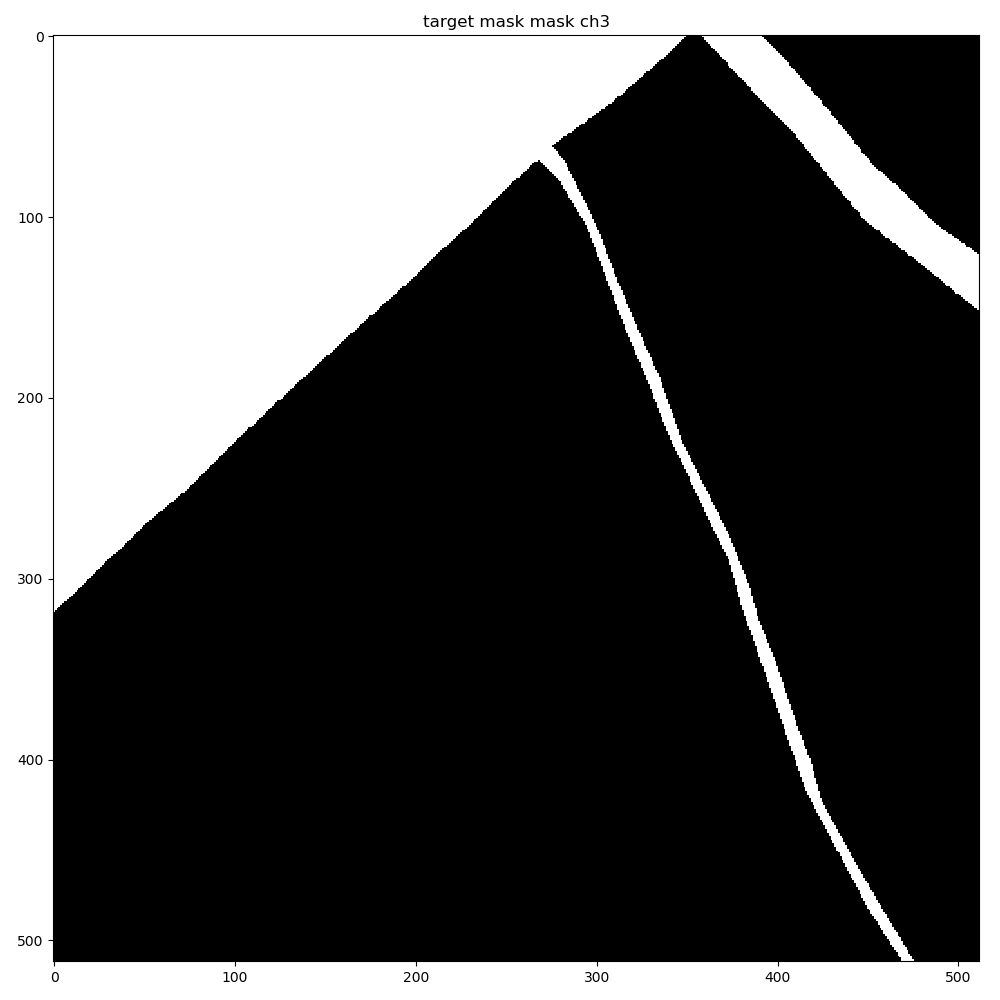

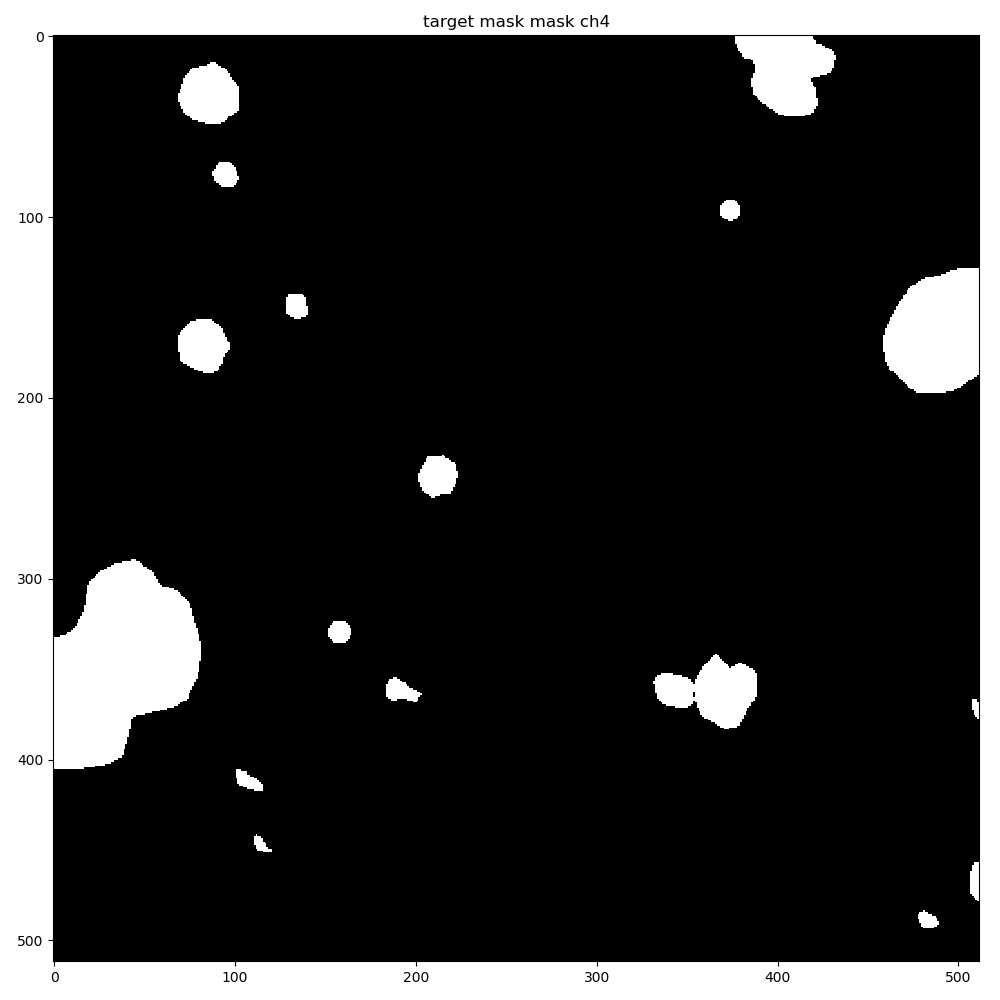

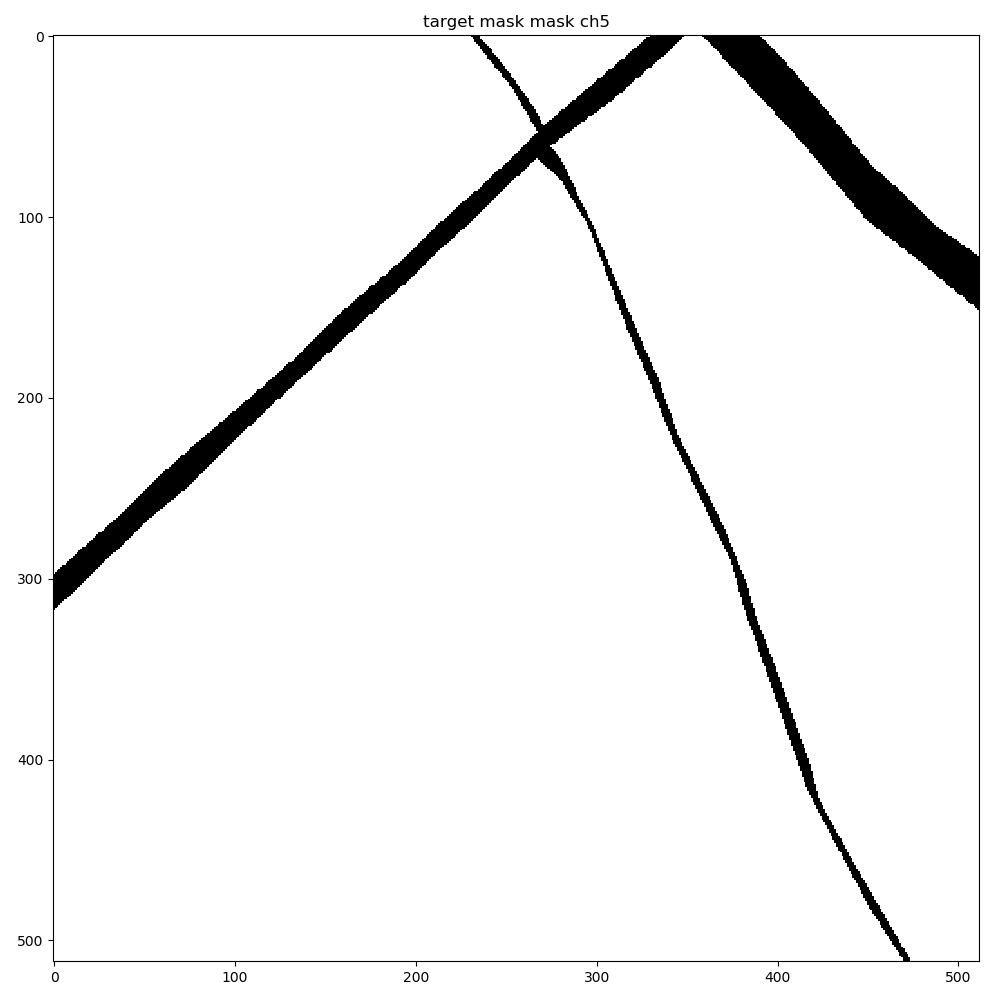

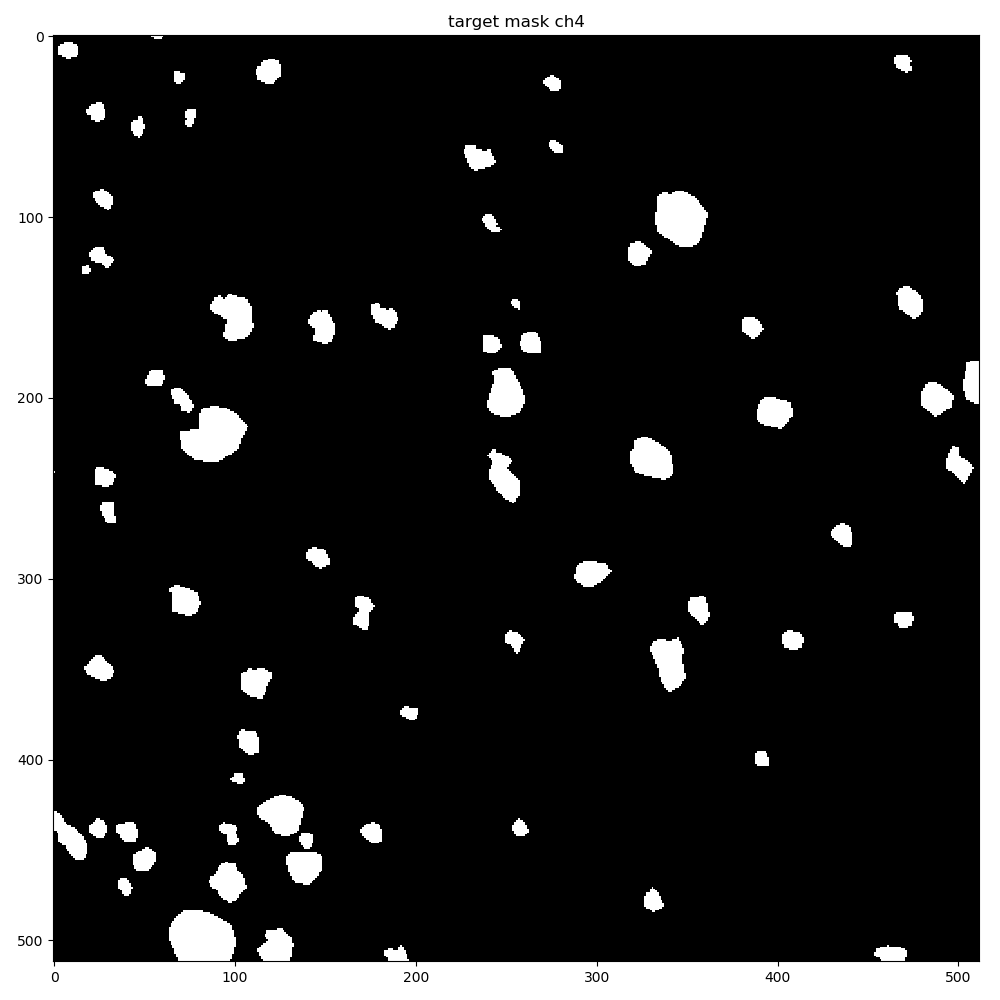

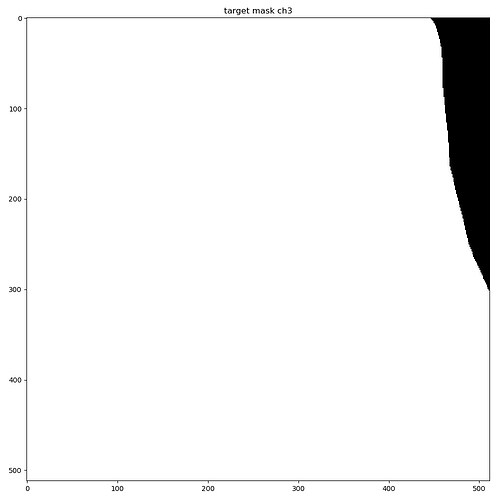

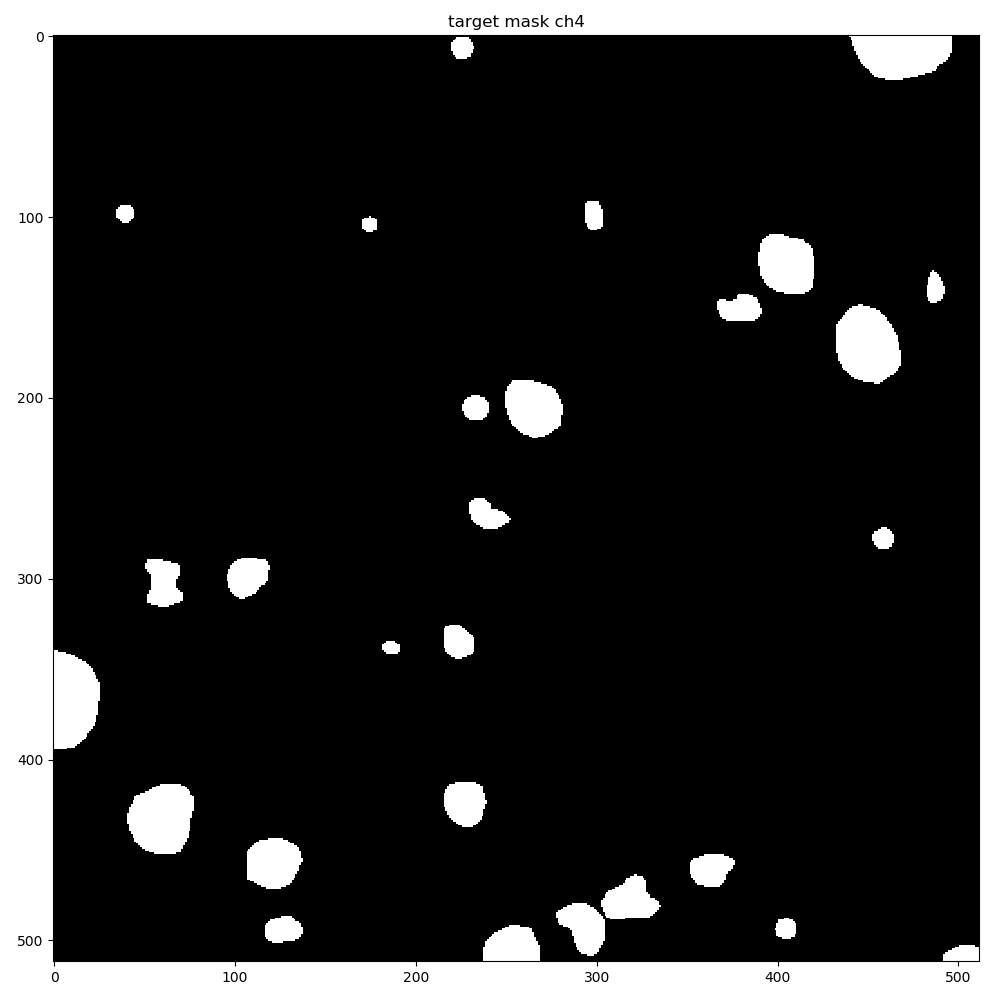

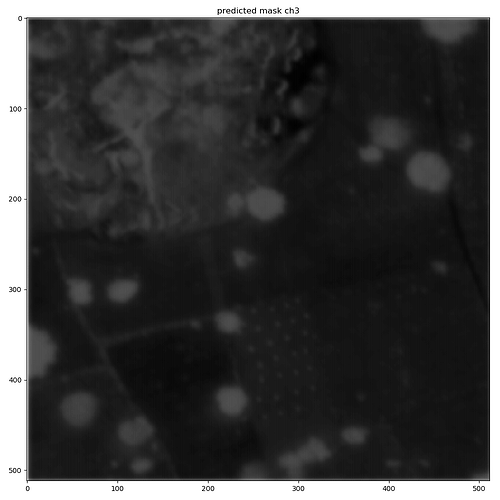

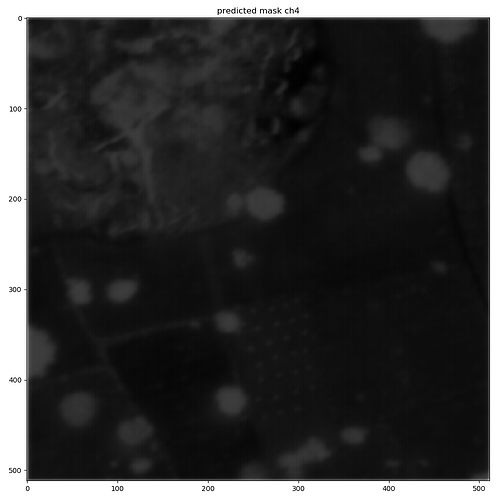

I’m guess it is perhaps because I converted my images and mask to a PIL image, and it has changed the shape of the mask to (3, 256, 256) from the original (10, 256, 256) shape.

This is what I am doing towards the end of my dataset class:

image = torch.from_numpy(image)

image = tvf.to_pil_image(image)

mask = torch.from_numpy(mask)

mask = tvf.to_pil_image(mask)

"""

Apply user-specified transforms to image and mask.

"""

if self.transform:

image, mask = self._transform(image, mask, self.transform)

"""

Sample of our dataset will be dict {'image': image, 'mask': mask}.

This dataset will take an optional argument transform so that any

required processing can be applied on the sample.

"""

sample = {'image': image,

'mask' : mask}

return sample